A defense of AI art

It's not just slop, it's not stolen, it's not bad for the environment, and we should want art to be easy to make

I’ve seen a lot of negativity about AI art. I don’t think it’s important that everyone like or experiment with AI art, but I do think that a lot of the critics are getting some basic facts wrong. Specifically, I think they’re misunderstanding the current quality of AI art, why AI doesn’t “steal” the art it trains on, and why it’s not bad for the environment. It’s fine to not like AI art, but it’s not fine to have these misunderstandings. I’ll address each in this post. A lot of people also bring up concerns about human artists losing work to AI. This is a more complex values question that I talk about in a later section.

I don’t use AI art much, but when I do it’s nice to easily create images I like looking at, even if I know they might not have a deeper meaning or intention behind them. I’m not trying to convert you to liking AI art, I just don’t think you should worry about it as a serious problem, and I want to share what I think is interesting about it.

The quality of AI art

Pleasant pictures vs. serious art

I think of there being 2 categories of art:

Art that's challenging or interesting or emotionally engaging

Art that's just pleasant to look at without any real depth

This post will only be about the second category. I haven’t seen any AI art that I’d consider challenging or interesting, but I’ve seen a lot that I find pleasant. I wouldn’t want to automate away art that’s intentionally created by a human to challenge me or draw me into ways of thinking about the world I couldn’t otherwise access, but a lot of my (and others’) engagement with art isn’t like that. A lot of everyday people are often just looking for pleasant images or sounds. It’s okay that people spend time listening to lofi hip hop beats when they want to relax, and it’s okay that it’s easy and cheap to mass produce those. I don’t see why we wouldn’t want to produce a lot of additional pleasant pictures on demand if it makes people happy.

It might be that human artists can use AI to produce challenging work that would fit the first category. In that case I’d consider the AI to be just another medium, like paint or clay, that the human is using and refining to get at a very specific aesthetic experience. I haven’t really seen that type of art yet but would be open to it if and when it arrives.

A lot of AI art is bad because the everyday people making it have mediocre taste

I think a lot of what gets shared as impressive examples of AI art is really ugly. It’s what critics call “AI slop.” Here are some examples:

Looking at what’s popular on MidJourney right now:

All these images are sickly sweet, weirdly shiny, and “cringe.” A lot of people who haven’t looked into AI art much assume that all of it has this “attempting to be epic” look, like something an 18-year-old on Reddit would be excited about rather than a more serious adult with a more subtle appreciation for complex and challenging art. They’ll often say the sickly sweet AI art lacks human depth, and assume the reason it looks that way is that it was created by a machine rather than a human. I agree that the images above, and a lot of what’s shared as “impressive” AI art, look like this. It’s bad, but I don’t think the reason it’s bad is because it was created by AI.

I think what’s actually happening here is that a lot of people sharing AI art are just average people with an average person’s level of appreciation for art, which is often pretty cringe and far from what artists and art critics would consider high quality. Obviously this is elitist, but I think it’s true.

If you look at communities of everyday people sharing art they like, it’s often pretty tacky and embarrassing. Where can we find some examples of everyday people sharing a lot of art they like? It’s important to not look at a community of artists or art critics, or people spending a lot of time trying to refine their own taste and display it, otherwise it’s not representative of what average people are looking for. We could look at a large forum of everyday people sharing desktop wallpapers they like. This is one of the most common ways everyday people display art. Artists and art critics won’t be the predominant users, it would be mostly everyday people sharing images that make them happy. What would this art look like? We can go to Reddit.com/r/wallpaper to find out. With almost 2 million users it’s the largest forum I know of for sharing images that everyday people enjoy looking at, with no competition to display their refined taste. Here are the top 5 most upvoted images on /r/wallpaper of all time:

I don’t like any of these images at all, and I’d go so far as to say that I’d be embarrassed to have them as my desktop background. It would signal that I’m not “with it” and that I’m lacking a depth of appreciation for art that mature adults have. Again, a very elitist thing to say, but I guess I’m just kind of a snob about the art I consume and surround myself with.

The same thing happens if we look at /r/art, with 22 million members. This one is more directly focused on art, but in my opinion the top images of all time also basically look like slop:

A lot of the AI art that’s getting made and shared is by people with an average level of art appreciation, so we should expect most of it to not fit our high standards.

When critics of AI art say that it feels inhuman and artificial, it’s hard not to assume that they would have the same reaction to the /r/art or /r/wallpaper pictures. I think what’s actually happening here is that critics of AI art are just reacting to the tackiness of everyday people’s taste, and incorrectly blaming AI for that tackiness. AI has in some ways democratized access to creating art, so we should assume that a lot of its output will be much lower quality by the standards of the elite.

The tackiness of common taste isn’t new. What would you guess is the most popular art print of the 20th Century, the one that the most Americans had hanging in their homes and offices? Maybe it was something that everyone knows, like a da Vinci painting, or something more bold and modern like Warhol’s Campbell’s Soup Cans, or something simple and calming like The Starry Night.

All of these are wrong.

The most popular art print of the 20th century, the one that the most Americans hung in their homes, was the 1922 painting Daybreak by Maxfield Parrish:

There was 1 print of this made for every 4 American homes in the 20th Century.

Something funny about Daybreak is that it has the feel of AI slop. It looks like it’s trying to be epic and over-the-top. The color scheme feels more like it’s supposed to just make my eyes happy rather than invoking a more subtle mood. The two figures even have a shine to them. This makes me suspect that what’s happening with AI slop is that the masses’ taste is, for the first time, being much more widely manufactured and shared, and the results aren’t pretty to me and other art snobs. The demand for slop was always high, it’s just finally being met with adequate supply. If you find yourself assuming that all AI art looks bad and cringe like this, consider that this might mostly be coming from a kind of elitist reaction to the tastes of everyday people and what they’re choosing to do with AI, not the full capabilities of the AI models themselves.

It might be that AI does have some inner tendency toward producing slop because it was trained on so many images. It’s very likely that a lot of the images it was trained on were just bad art!

If you play with an AI image model enough, it becomes easy to generate art that you like

These are some images I’ve generated on MidJourney that I personally enjoy looking at. I’ve posted more here. You might not like them! I’m using these as examples of what an AI image generator can make that doesn’t have the “inhuman slop” look to it, not examples of objectively great art.

Impressionist painting of Western Massachusetts

Minimalist painting of a forest

Photorealistic graffiti of a sphere

Watercolor farm

Watercolor cassettes

West Virginia painted in the style of Lawren Harris

Modern art painting of a city

Landscape architect sketch of a brutalist monument in a forest

If you don’t like these, try playing around with MidJourney for a while and see if you can make the type of images you like. If it doesn’t happen to work at first, experiment for a bit before giving up. Try getting used to the MidJourney controls and editor options.

My goal here isn’t to convert you to liking AI art, it’s just to show that the “slop” vibe of so much AI art isn’t built into the models themselves and they’re capable of a lot more.

I think we should be skeptical of simple humanist narratives that there’s something fundamentally magic happening in the human experience of art that AI can never access. Modern AI is built to pick up on extremely subtle vibes, patterns, and associations, not to impose clearly written explicit rules and algorithms. It shouldn’t be surprising that it can pick up on much more subtle decisions human artists make and recreate them without being able to clearly articulate what it’s doing. I worry that people saying that AI art is all low quality because it’s lacking some mystical human element might just be imagining that humans have some deep magic that machines can’t eventually access. Because I don’t believe in magic, I don’t believe that.

I don’t think AI models can produce art at the level of the best artists right now, or be as creative and groundbreaking as human artists, but I do think they can produce images at a higher aesthetic level than what most humans (including me) can create. This seems useful and exciting and opens up a new world of fun ways to play with and generate aesthetic experiences.

Death of the author

When I went to college, if you didn’t believe in “the death of the author” you were considered kind of dumb. The idea is that critics should not defer to an artist or author’s intentions for their art as the final arbiter of what the art itself means. Art was understood as having a life of its own independent of the person who happened to create it. This implies to me that we should be more willing to engage with images on their own merits rather than looking for the deep intentions of the human who created them. If you accept the death of the author thesis, it seems silly to declare that only humans can produce “real art.” If we assume that the artist’s intentions don’t matter and we should allow ourselves to be affected by the piece as it exists on its own, we should be more open to work produced by machines without human intentions at all.

Sometimes machine-like inhuman art can be great!

It’s hard not to think that a lot of people criticizing art because it was made by a machine would have a similar negative reaction to some of the best artists of the 20th Century. Andy Warhol specifically presented his art as a challenge to the idea of individual artistic genius, treating art as a process that could be mechanized and even depersonalized. His Factory churned out silkscreens in a way that intentionally blurred the line between mass production and fine art, and he openly embraced the idea that his work was about surface, repetition, and commercialism rather than deep personal expression. If Warhol could take mass-produced imagery, apply a process that involved minimal handcrafting, and call it art, why should AI art be any different?

Is AI art stolen?

AI doesn’t memorize the images it’s trained on and plagiarize them later

AI is trained on the work of millions of artists. It’s fed an incredible number of images from across the web to build its intuitions about what we want it to produce, and then it produces art based on the vibes it picked up from those images. Is this a form of theft against the human artists?

What normally counts as plagiarism

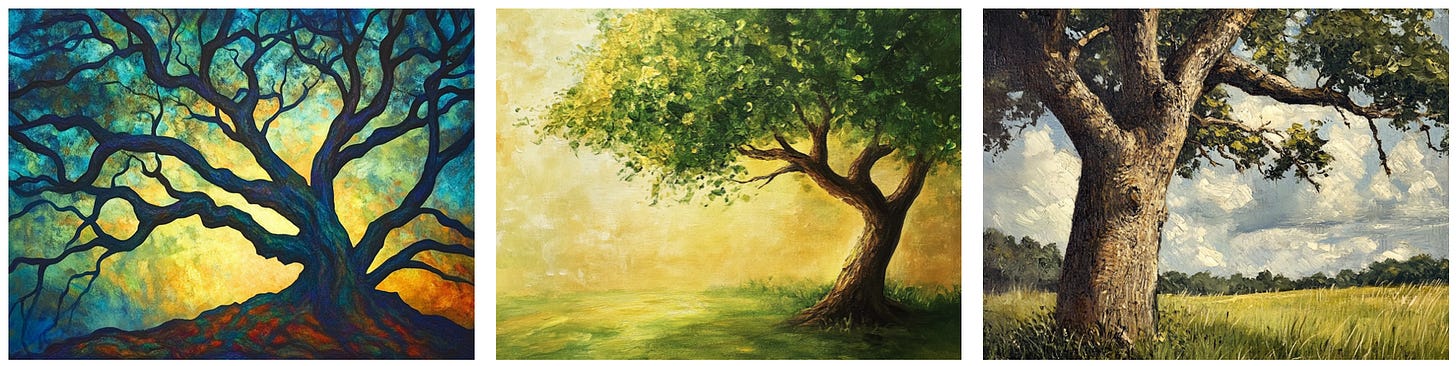

I go to an exhibit and see some paintings of trees that look like this:

I take inspiration from them and paint this image of a tree:

You’ll notice this tree has a bit of the vibe of each of the ones I saw at the exhibit: the branching pattern of the first, the basic color scheme of the second, the thicker trunk and surrounding trees of the third. Have I plagiarized the other artists’ work?

Obviously not. It’s very normal for artists to be inspired by other art they saw and incorporate the basic vibes they like into their own work.

Now instead I produce this painting:

This is very obviously plagiarizing the second painting from the exhibit. There are some extremely slight details that are different (falling leaves) but it’s so obviously a copy of what I saw that it’s a form of plagiarism.

What is the difference between these two? Basically what makes something plagiarism is whether you cross a line in how much you’re taking from a very specific piece of art, rather than a lot of different art. You can incorporate the vibes of a lot of previous pieces into a new piece, and that’s considered creative. However, if you only slightly modify a single piece and are clearly only drawing inspiration from that one piece, it’s plagiarism. I’ll stick to this definition of plagiarism for the rest of the post.

You may have correctly guessed that I generated all these images with AI. With the first example, I asked it to incorporate the general vibe of the original 3 trees into a new tree. It did a great job of incorporating the overall vibes without making an explicit copy. For the second, I asked it to make an almost exact copy of the second tree with some very slight changes. It did that well too.

My claim here will be that if AI works like the first example, where it picks up on the subtle interesting artistic vibes of a lot of different works and incorporates them into new works, it’s not stealing those works, in the same way I wouldn’t be stealing from art I was inspired by. It would be doing exactly what human artists do all the time to create new art. However, if it’s just storing a ton of images to individually copy when creating something new, it is stealing that art.

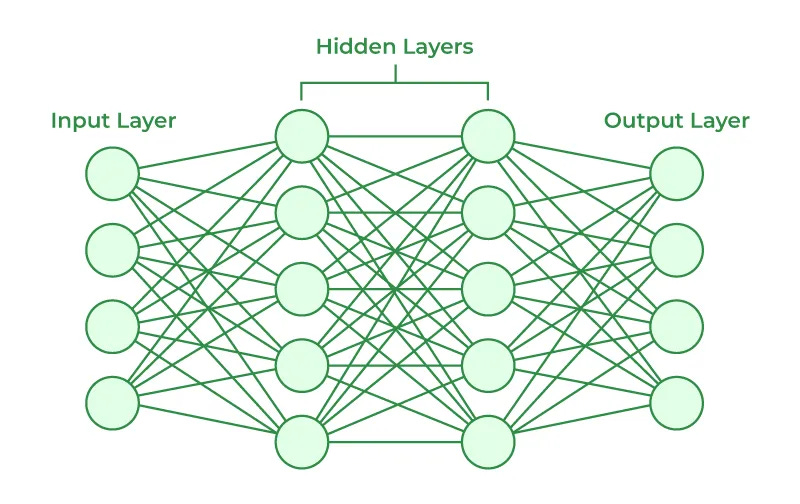

How AI models work: they’re entirely inspired by vibes

It’s really, really worth taking some time to get a basic sense of how current AI models work. I have a separate post on how to do that. If you don’t want to read all that, I think the best single thing you can do is watch this 8-minute video:

A key takeaway from the video is that deep learning models do not save specific information in a specific place and then give it back to you later as output. I've found that a lot of people assume AI language models have every possible answer to every possible question memorized and stored in different files, and all the AI is doing is calling up those saved answers. This is wrong. People make the same assumption about AI image generators. They assume the AI has saved every image it was trained on, and when it's asked for a specific image, it searches those saved images for a match, modifies one a bit to avoid copyright issues, and then gives that one image to the user. This is also wrong. If this were true, the AI would be plagiarizing art in the same way my painter did in the last example: taking a single saved image someone else produced, modifying it slightly, and presenting it as the AI's own work. What's actually happening when AI trains is that it’s picking up on all the more subtle patterns and statistical relationships in millions of images or pieces of text, and then constructing new material based on those patterns, like the first painter example, where the painter is inspired by, but isn't directly copying, any one of the tree paintings.

When AI is trained on art, it doesn’t have anything that allows it to just “save” an image in the way we could save a file on our computer. Instead, it's given a bunch of examples of different types of art, and then it adjusts an incredibly large number of simple connections between "neurons" (simple computational units that process information by receiving inputs and producing outputs). These connections are called "weights." The latest image models have billions of these weights and similar values, so they can pick up on billions of individually subtle patterns and relationships in the data.

The motivation for using this type of model instead of specific instructions written by humans is that we can let it train itself and pick up on subtle rules that we ourselves can't articulate in words or code. By continuously adjusting its weights as it trains on millions of examples, until it can accurately predict what specific types of art are supposed to look like, it can learn an extremely large collection of subtle patterns, heuristics, and vague rules that we can also pick up on but can't articulate.
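To make “adjusting weights” a little more concrete, here is a deliberately tiny toy in Python. It is an analogy, not how MidJourney or any real image model is built: the hidden pattern (y = 3x + 1), the data, and the function names `make_points` and `train_line` are hypothetical, invented for illustration, and the training method is plain gradient descent on a model with just two weights rather than the enormous networks image generators use. What it does show is the structure described above: training repeatedly nudges the weights to reduce prediction error across all the examples at once, and what is left afterward is only those weights, not the examples themselves.

```python
import random


def make_points(n: int = 500, seed: int = 0) -> list[tuple[float, float]]:
    """Hypothetical toy 'training set': noisy samples of the hidden pattern y = 3x + 1."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        points.append((x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.1)))
    return points


def train_line(points: list[tuple[float, float]],
               lr: float = 0.1, epochs: int = 500) -> tuple[float, float]:
    """Fit y ~ w*x + b by gradient descent on mean squared error.

    Each epoch nudges the two weights in the direction that reduces the
    average error over every example. Only (w, b) is returned; the training
    points themselves are not stored anywhere in the result.
    """
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    data = make_points()
    w, b = train_line(data)
    print(f"{len(data)} training points -> 2 learned weights: w={w:.2f}, b={b:.2f}")
    # The trained 'model' answers for an x it never saw, using only w and b.
    print(f"prediction at x=0.25: {w * 0.25 + b:.2f}")
```

After training, the 500 points can be thrown away: the two numbers that remain capture the underlying pattern well enough to make predictions for inputs that were never in the data.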

If you believe that AI training on artists’ work is equivalent to theft, you should also believe that it’s theft for a human to observe these paintings:

and then paint this:

The exact same thing is happening in both the human and AI case.

Why are we all suddenly defending intellectual property?

I was always at least a little sympathetic to more radical ideas about how there maybe shouldn't be any intellectual property at all. Property rights make sense when there are clear reasons why you would be harmed if someone else used something you own. I’m very happy that I have property rights to my toothbrush. I don’t want other people using it. Intellectual property is by definition not really a scarce resource once it’s created. If I write a blog post, I’m not affected by people copying and pasting it somewhere else. Maybe they would get some credit that I’d deserve instead, but that’s very different from me being deprived of something physical. I and others can still access the information whenever we want.

Internet piracy works in a similar way. If I walk into a store and steal a DVD, I deprive the store owner of a physical object they put a lot of effort into acquiring, and that no one else can now use. Pirating the movie online is more like walking into the store, hovering my hand over the DVD, creating an identical copy out of thin air for myself, and leaving the original DVD where it was. I don’t deprive anyone else of access to the DVD. The big issue with doing this is that I deprive the store owner and the movie company of the reward for making and distributing the DVD in the first place.

I’ve known a lot of people who are very pro internet piracy but for some reason very anti AI models “stealing” art. This is confusing to me. Either you believe in intellectual property rights or you don’t. The best explanation is that these people might think that by pirating movies they’re attacking large corporations, whereas stealing art harms individual creators, but this seems like a pretty blurry arbitrary line. Many individual artists work for movie companies and pour a lot of hard artistic work into what they do.

While I’m against internet piracy, I think there are good arguments for significantly relaxing intellectual property protections. The main argument for having some IP rights (incentivizing the creation of valuable intellectual work) is convincing, but I worry there are too many cases where IP rights are clearly disincentivizing new creative work. However, even if I didn’t want to reduce IP protections, the call for art to be “protected” from AI training is not only a demand to keep IP protections as they exist; it’s a call to radically expand them. It’s equivalent to requiring everyone viewing art on a website to sign a legal document saying not only that they won’t directly copy the art they’re looking at, but that they won’t even produce anything inspired by the general vibe of what they’re seeing. This would radically restrict the art that gets made. I would personally like a lot more (and more varied) art to exist, and would like our IP laws to incentivize that, regardless of who (or what) is producing the art in the first place.

AI art’s energy use

MIT Technology Review found that AI image generators use 1.22 Wh per prompt.

For comparison, instead of generating an image with MidJourney, you could use the same energy to:

Keep a web page open on a laptop for 2 minutes.

If it takes you 10 minutes to read this blog post, for the same energy you could have generated 5 AI art images. If you don’t feel bad about using energy to read this, you shouldn’t feel bad about using energy to generate AI art.

The cost of 1.22 Wh of energy where I live in Washington, DC is $0.0002. If the datacenter generating AI art were in DC, you would need to generate 3,300 AI art images to increase the datacenter’s energy bill by $1. The average DC household uses enough energy each month to generate 520,000 AI images. This is an incredibly small amount of energy and worrying about it will distract the climate movement from more serious problems.
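The arithmetic in this section is easy to check. Below is a minimal Python sketch; the only inputs taken from the text are the 1.22 Wh per image figure and the numbers quoted above, and electricity prices are treated as parameters rather than looked up (the function names are hypothetical helpers, not from any library). Working backward, the claims imply a laptop drawing about 37 W and a household using about 634 kWh a month. The $0.0002-per-image figure and the 3,300-images-per-dollar figure imply somewhat different electricity prices (roughly 16 and 25 cents per kWh), so both are best read as rough estimates; either way the conclusion that one image costs a tiny fraction of a cent is unchanged.

```python
"""Back-of-the-envelope check of the energy figures quoted above (a sketch)."""

WH_PER_IMAGE = 1.22  # Wh per generated image, the MIT Technology Review figure cited above


def laptop_watts_implied(minutes: float) -> float:
    """Laptop power draw (W) at which `minutes` of use equals one image's energy."""
    return WH_PER_IMAGE / (minutes / 60)


def images_per_reading_session(reading_minutes: float, laptop_minutes_per_image: float) -> float:
    """Number of images whose energy matches `reading_minutes` on that laptop."""
    return reading_minutes / laptop_minutes_per_image


def images_per_dollar(price_per_kwh: float) -> float:
    """Images that $1 of electricity pays for at an assumed price ($/kWh)."""
    cost_per_image = WH_PER_IMAGE / 1000 * price_per_kwh
    return 1 / cost_per_image


def price_implied_by_images_per_dollar(images: float) -> float:
    """Electricity price ($/kWh) implied by the claim '`images` images per $1'."""
    return 1 / (images * WH_PER_IMAGE / 1000)


def price_implied_by_cost_per_image(cost: float) -> float:
    """Electricity price ($/kWh) implied by the claim 'one image costs `cost` dollars'."""
    return cost / (WH_PER_IMAGE / 1000)


def household_kwh_implied(images_per_month: float) -> float:
    """Monthly household use (kWh) implied by 'N images' worth of energy per month."""
    return images_per_month * WH_PER_IMAGE / 1000


if __name__ == "__main__":
    print(f"Laptop draw implied by '2 minutes': {laptop_watts_implied(2):.1f} W")
    print(f"Images per 10-minute read: {images_per_reading_session(10, 2):.0f}")
    print(f"Price implied by $0.0002/image: ${price_implied_by_cost_per_image(0.0002):.3f}/kWh")
    print(f"Price implied by 3,300 images/$1: ${price_implied_by_images_per_dollar(3300):.3f}/kWh")
    print(f"Household use implied by 520,000 images: {household_kwh_implied(520_000):.0f} kWh/month")
```

Keeping the price as a parameter makes it easy to plug in whatever rate applies where the datacenter actually is.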

How should we think about human artist job loss to AI?

Let’s imagine that we invent a way to pause time. It becomes extremely easy for anyone to spend as much time as they like pursuing hobbies they care about, and then unpause time to show off their new skills. Almost everyone spends a lot of their paused time becoming good artists. They get really good at expressing their exact taste using different mediums, to the point that it becomes easy for them.

As a result, the world has a lot more complex and varied art from almost everyone, from almost all perspectives and tastes. The supply of art explodes. This means that it becomes hard to make a living as an artist. Many artists who spent years refining their skills before the time pause was invented are now no better at art than everyday people. They still have their unique perspective and can share their art with others, but because it’s become so easy to access art from anyone and everyone, basically no one is willing to pay a lot of money for it.

In this world, there’s more art than ever, it’s not commoditized, everyone is able to express a much fuller sense of themselves and their individuality through art and learn a lot more about others via art that others make, and it is also mostly impossible to have a career as an artist. Former professional artists get other jobs and only do the art they care about on the side.

Let’s imagine now that you’re running society and have the choice to invent this technology to give people the ability to pause time and pursue what they like. Do you choose to do that?

I see the situation with artists and AI art as being more or less identical to this situation. AI art gives everyday people the ability to produce a lot more art that’s refined to their specific individual taste. It’s like we’ve magically given everyone a much greater artistic ability. It’s obviously different in the sense that they don’t have the same experience of putting the art together over time, so maybe we can think of it more like giving everyone a camera for the first time instead of having to paint and draw scenes that they like. There is no way we can give everyone much more artistic ability without also making it harder to make a living as an artist, in the same way making everyone a fantastic chef would reduce the demand for restaurants.

If you think this is silly because AI art is just punching words into a prompt and letting the AI do the art for you, I don’t think you understand how current AI art tools actually work. They give everyday people a ton of editing power and ability to refine images and music and video over time to fit what they’re looking for. They make a lot of people happy and excited about the art they produce. This is a big deal! It’s like we’ve discovered a new magical artistic medium that a lot of people really enjoy using. Our baseline reaction to that should be positive.

I love art and the people who make it. I’m not out to undercut their livelihood. The problem is that progress in making a product more accessible by definition undercuts the livelihoods of the people who can only make a living if the product they’re selling is scarce. To solve this problem I’d be excited to either redistribute wealth to give people more time to pursue art, or help them find new fulfilling jobs, but I’m not going to ask to pause a technology that’s making the art itself much more available for everyday people to create.

People will often talk about problems with new technology without being clear that the problem is that the technology is making everyday people too free and able to do what they want. Everyday people being given inexpensive powerful tools to do what they want is extremely good, and it also creates problems. The reason why deceptively edited AI videos have become a widespread problem isn’t that there’s something evil lurking in the AI video editors themselves, it’s that AI video editor programs are just very effective and easy to use, and have made it easy for everyday people to basically become special effects artists and video editors quickly. A lot of new societal problems happen when everyday people become more free and empowered to pursue what they want, because some of those people will have bad intentions, but except for extreme cases we should always be pro-empowerment and pro-freedom for everyday people and deal with the risks that come with that afterward. We should think about AI art in the same way. Most of the bad effects of AI art on artists are coming from the fact that AI makes it much, much easier for everyday people to create art they like. This is a downside of giving people more freedom. We should learn to live with the downsides of freedom.

In general, there will usually be way more positive spillover effects after automation makes it much easier to produce a lot more of a scarce resource than the negative effects on specific people who might lose work. We should acknowledge the benefits of a new medium that lets everyday people produce an incredible amount of very specific and personal art as if they’ve had years of practice. It could usher in a world where everyone has much easier access to art, at the expense of the people who (very justifiably!) benefit from the scarcity of artistic talent.

AI art and creativity

It’s a philosophical question whether people are “creative” with AI art, whether they “make” AI art in the first place, or whether it counts as art at all. When I generate AI art, I think of it as if I “found” images in MidJourney’s vector space. I’m excited that I found them in the same way I’d be excited about finding a neat rock. I don’t think much of my own creativity went into it, and I know it’s different from creating art myself. Curating AI art feels kind of like curating a Pinterest board. I think that can be a neat way to spend time to develop and show off your own taste even if you’re not putting any creative effort into the images themselves.

Conclusion

To summarize my points:

AI art models mostly produce slop because it’s what everyday people want.

It’s easy to make AI produce better art, you just need to prompt it yourself instead of relying on the taste of the average user.

AI doesn’t “steal” art by any reasonable definition.

Intellectual property rights would need to be expanded so much to stop AI from training on art that they would be draconian.

AI art isn’t bad for the environment and you would need to generate hundreds of thousands of images every month to have the same effect on the environment as a normal American household.

We shouldn’t entertain arguments against AI art that would also work against cheap, abundant, good art that anyone could participate in. Human artists are able to have careers because their talents are currently scarce. If the bad thing about AI art’s effect on artists is that it causes artistic talent to cease to be scarce, the good outweighs the bad.

One final point that didn’t fit anywhere else in the piece: I think a useful rule to follow is that a fair criticism of AI art shouldn’t also apply just as well to:

Fan art

Photoshop

Photography

The mechanical reproduction of art prints

Everyone magically gaining a lot of artistic ability

Before criticizing AI art, just double check that your point wouldn’t also apply to each of these.

You should play around with ChatGPT’s image generator or MidJourney or other image generators if you haven’t yet! I didn’t even get to other forms of AI art like music. I’ve been having a lot of fun generating AI songs using Suno and uploading them here. I’d recommend starting with my Donald Rumsfeld musical. Here’s an album of chill AI background music I put on Spotify.

'I’ve known a lot of people who are very pro internet piracy but for some reason very anti AI models “stealing” art.'

Great to see someone make this point. As someone who makes and sells copyrighted content for a living, I've spent twenty years hearing from everyone online that piracy (i.e. swiping a copy of my work instead of paying me for it) is actually progressive and fine. Now the same voices are insisting that AI art (i.e. using a model where my work might represent one ten millionth of the training data) is an intolerable act of theft. That makes no sense to me. As a creator I would so much rather people use LLMs than use torrents.

I think this is another exceptionally well-researched, well-written and well-argued piece.

On the topic of whether AI art is stolen art, I agree with you, because I understand how LLMs work, but that is not how regular people think about this.

1. Regular people don't see further than "training input goes in and output comes out." The point that the model picks up vibes from a multitude of artworks doesn't make this not stealing in their minds; it just steals from all of them. This view operates with a different definition, i.e. what makes it not stealing is the artist adding their unique individuality to the many influences they had. What this means is of course not well defined; nevertheless, it will be very difficult to convince someone who operates under a different definition. So it would have been good to elaborate a bit on why you stick to your definition.

2. Modern AI is not seen as something open and democratic, but as controlled by big tech and as such exploiting people to make huge profits in the hands of a few. Regardless of how much truth is in this view, people don't judge AI art in a vacuum purely from a technological POV; they consider the social context (which might in part also explain why piracy might be judged differently).