A few meta points on my posts on AI and the environment

Some quick notes on what I'm doing

These are a few clarifying points on my series on AI and the environment that I haven’t found a place for in the posts themselves.

Everything I’m saying is actually just a few points repeated over and over

There are just a few simple points I’m trying to drive home in all these posts, expressed over and over in different contexts:

For your personal footprint, compare AI to your total carbon and water footprint and see if it has any effect at all. Don’t compare it to incredibly efficient tiny things like Google searches.

For local and global impact, compare AI to other industries and applications, not to your personal lifestyle. Manufacturing iPhones uses huge amounts of power in total. It would be ridiculous to compare manufacturing all iPhones to the number of flights an individual person takes. Creating a new product for a billion people is a more relevant comparison to training an AI model. Many data centers use about the same amount of water as facilities in other industries, and so on. Always contextualize large numbers.

The collective environmental footprint of society is made up of the footprints of individuals. We should behave in the ways we’d want the group to behave. We should prioritize the things that actually help the climate the most, because some things have many orders of magnitude more effect than others.

All environmental and climate impacts of all industries are “new” in the sense that every day, all industries add new carbon emissions and air pollution to the environment. Treating AI data centers as special just because the buildings themselves are new normalizes much larger sources of emissions and harm that coincidentally were already happening.

AI and the environment is confusing to think about because of the environmental paradox of data centers: they put uniquely large, concentrated demand on local grids, yet they are tiny, efficient parts of the global energy grid. They can look big and evil on the ground, but they are actually remarkably efficient.

In general, computing is one of the most resource-efficient parts of society.

Most of AI’s effects on the environment will probably be caused by how it’s used, not by the physical operation of the data centers where it’s run.

All of these points seem really simple individually, but I wasn’t finding any popular commentary on AI and the environment online that checked all these boxes in the way I wanted, which was a big motivation to post about it more. There are a lot of great industry reports written for more technical audiences, but a lot of the popular coverage I was reading went against basically all of these points.

Why these posts are so long

I’m capable of writing short posts. The reason the AI and environment posts tend to be so long is that for each one:

I find very few other sources online that collect what I want to say about the topic in one place. I figure it might be useful to other people to have these points gathered in a single post rather than spread across 20.

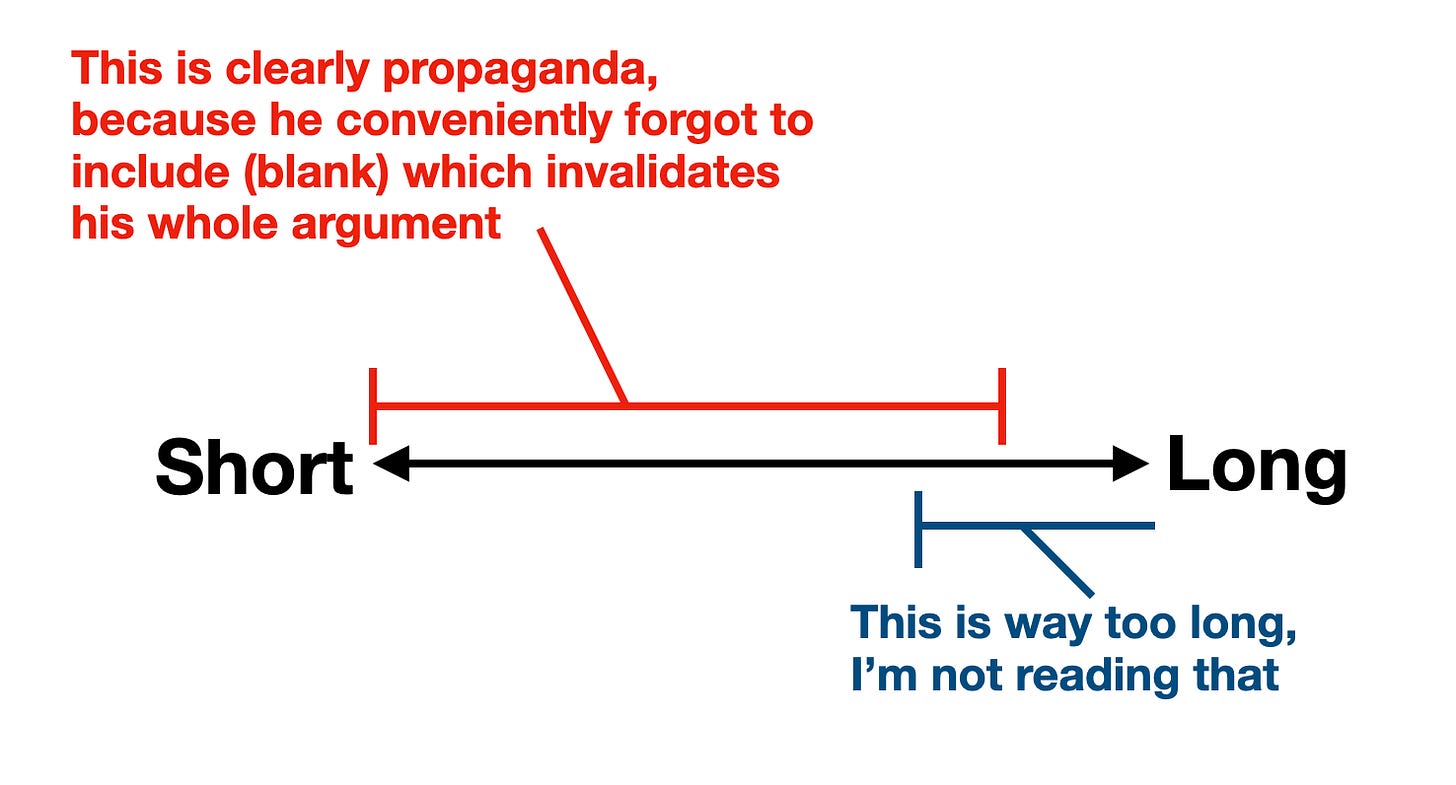

I’m faced with a choice. If critics read my post, they’re going to come away with one of two takes based on how much I write:

Between these two, I’d rather aim beyond that red line: clearly label the contents of the post, give readers the option to skip around, and make it really long rather than come across as dodging some important point. Even so, a lot of people still announce that I’m dodging some point that I very clearly label. One of the most common reactions to my first post on this was “How convenient, he left out the cost of training, where most of the energy is used,” even though I had a clearly labeled training section. Can’t win ’em all.

Getting even these basic points across takes a ton of nuance, and I want to make it clear where I’m coming from. Since college I’ve worried that the environmentalist movement is too quick to label things “whataboutism,” and I was finding that it was a very common objection, so writing down what I think about it, and why I worry environmentalists overshoot here, seems useful. I have a 15-year backlog of following and reading about climate and environmentalist ethics, so I’d rather get it out there when it’s relevant.

I could just leave people with that list of basic ideas above, but I keep getting a lot of positive reactions and feedback on each new post, so it seems like actually doing the deep dives is providing value.

This isn’t actually the single most important problem

I’ve been writing about this a lot more recently mainly because I’m finding that I have some nonzero influence on the conversation and am building up a big audience that seems to value it. My original post and my cheat sheet post each have 100,000 views now, definitely the most anything long I’ve written has gotten. Three of the top five image results for “ChatGPT and environment” are from my blog. Neat!

This has been a really fun surprise. I’m mashing this button as long as I think I can actually add new, useful stuff to the conversation and get a positive reception. Longtime readers know that I used to blog about a much wider range of topics, and I want to get back to that. I’ve been pretty busy recently, and when choosing what to write about, it still seems like there are some big gaps in the AI & environment debate that I’d like to focus on addressing. But there are only so many of them, and once I have more time I want to broaden what I post about again. This is temporary.

More people should be doing this!

I’d really like more popular writing on AI and the environment from people who can go beyond “Oh, AI’s weird and new and is using a lot of energy and water. Must be bad, end of story.” There’s a lot of great technical writing on AI and the environment, but material aimed at regular people is still shockingly scarce. If you search YouTube or TikTok, most of what you see is pretty misinformed. If you have skills in either format, that might be a niche you can fill.

I presume most journalists are honest, but they might also be stretched thin or covering a topic they don’t know much about

I don’t want to come off as implying that there’s a vast journalistic conspiracy against data centers. I’ve been roasting a lot of recent coverage, but I think the problems I’m identifying mostly come from journalists being somewhat new to the issue and running with previously established conventional wisdom about data centers, like the idea that they use a lot of water. Less charitably, it might be that big, weird new buildings using a lot of power and water just get clicks, but I presume almost everyone covering this is genuinely interested in the truth and is just slipping up because public understanding is poor.

There are exceptions, but I really don’t want to come off as implying that journalists as a whole are actively choosing to be deceptive about this. I think they’re sometimes just taking shortcuts based on pre-existing coverage.

What would cause me to think that AI is actually bad for the environment

I would change my mind about AI and the environment if I saw good reasons to believe any one of these four points:

1. Using AI raises individual people’s carbon or water footprint in expectation.

   The numbers are so tiny that if people switch from using AI to most other activities, their emissions probably go up. Right now the numbers imply that if AI is using a large amount of your energy and water, you’re using it so much that you’re not doing other activities that are much worse for the environment.

2. AI data centers are using an inordinate amount of power and water relative to the actual number of people using them (hundreds of millions), the tax revenue they generate for the communities they’re built in, or the usage of other major American industries.

3. Problems that specific AI data centers have created for local communities (xAI’s data center in Memphis might have added significant pollution) couldn’t be easily fixed with strict regulation and pricing.

4. AI isn’t actually valuable at all, or at least isn’t valuable per unit of energy and water it costs. This is the point on which I’m least likely to change my mind. I use chatbots at least hourly. They’re a big reason I’ve been able to learn so much about this so fast in the first place.

Something I’m not willing to entertain is the point that AI is bad for the environment because it emits at all, or uses any water. Under that definition, literally everything we do is bad for the environment, and saying “AI is bad for the environment” is identical to “AI is something people do.” I have a more detailed breakdown of the definition of “bad for the environment” I’m using here.

Like you, I really want to stay positive on journalists' fundamental honesty. But at some level of carelessness and clickbaiting, it barely matters anymore.

Here's CNN's latest headline: "Sora 2 and ChatGPT are consuming so much power that OpenAI just did another 10 gigawatt deal".

So OpenAI signed a deal with Broadcom to deliver 10 GW of compute capacity (not electricity) over many years. CNN then suggestively reframes this as OpenAI basically needing access to additional power plants _right now_ just to keep the lights on for Sora 2.

Sora is a free app, with no direct revenue stream, and only 1/800th of the users of ChatGPT... and according to the headline, is being subsidized to the tune of 10 nuclear power plants. That sounds very plausible, indeed.

FWIW, Reuters/AP/Bloomberg reported correctly and factually.

Your articles are extremely helpful. I have been going all in trying to learn AI and how I can leverage it to create a new career and direction in my life. However, my twentysomething children constantly berate me for using it and are exasperated at how willing I am to “destroy the environment.” Your articles have really helped me see how the media has shaped the narrative in many dishonest ways, and not just on the environment. They’ve helped me have thoughtful discussions and talk about critical thinking with my family. They’ve also cleared my conscience to move forward with the dreams and ideas I have about AI.