An example of what I consider a misleading article about AI and the environment

Just show the numbers!

The Verge recently published an article with this title:

“Google says a typical AI text prompt only uses 5 drops of water — experts say that’s misleading”

Stop and think about what this headline conjures up for you. To me, it sounds like Google is being shifty with numbers to present its AI model as not using much water, when in reality it’s using enough water to at least be worth considering as a possible problem.

A lot of people are under the impression that prompting chatbots adds a significant amount of water to their personal environmental footprint. This title would vaguely reaffirm that belief.

The article’s paywalled, so I’ll quote from it sparingly:

Google estimates that a median Gemini text prompt uses up about five drops of water, or 0.26 milliliters, and about as much electricity as watching TV for less than nine seconds, roughly 0.24 watt-hours (Wh)

This is from Google’s latest study, the most comprehensive report we’ve received on the environmental impact of individual AI prompts.

But Google also left out key data points in its study, leading to an incomplete understanding of Gemini’s environmental impact, experts tell The Verge.

“They’re just hiding the critical information,” says Shaolei Ren, an associate professor of electrical and computer engineering at the University of California, Riverside. “This really spreads the wrong message to the world.” Ren has studied the water consumption and air pollution associated with AI, and is one of the authors of a paper Google mentions in its Gemini study.

Okay, this sounds bad. “They’re hiding the information” makes it sound like Google is actively choosing to withhold important information about how much water they’re really using. It’s not just a passive mistake, and this accusation is being shared by someone who seems to have expertise on AI and the environment.

When it comes to calculating water consumption, Google says its finding of .26ml of water per text prompt is “orders of magnitude less than previous estimates” that reached as high as 50ml in Ren’s research. That’s a misleading comparison, Ren contends, again because the paper Ren co-authored takes into account a data center’s total direct and indirect water consumption.

The article concludes:

“If you look at the total numbers that Google is posting, it’s actually really bad,” de Vries-Gao says. When it comes to the estimates it released today on Gemini, “this is not telling the complete story.”

Nowhere in this article is an actual number shared for how much water Gemini is using once you consider the offsite water. Google is “hiding the true water cost,” but so is the reporter.

Consider what your takeaways from this article would be. For me, I would think “Gemini’s water use is significant” and “Google is lying about how much water Gemini is really using. It must be bad!” There was a reference to estimates “going as high as 50 mL” so maybe it’s actually around there. That’s not 5 drops, that’s 1000!

Let’s see what happens when we look at the numbers.

The numbers

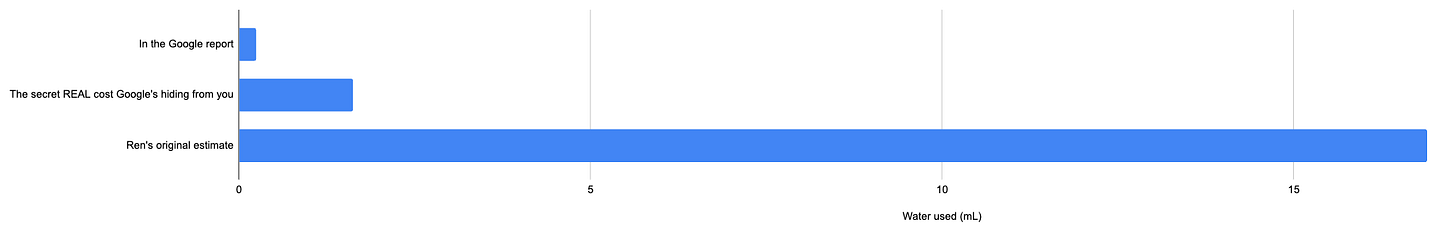

Calculating “offsite” water use for the power in data centers is easy. This is the water used in nearby power plants to generate electricity. Lawrence Berkeley National Laboratory’s comprehensive report on data centers finds that the power plants American data centers draw energy from use an average of 4.52 liters of water per kWh of energy generated. The Google paper found that their model uses ~0.3 Wh per prompt, and included the full energy cost in the data center per prompt. 0.3 Wh = 0.0003 kWh. So if the power plant uses 4.52 L of water per kWh, and the data center used 0.0003 kWh per prompt, then the power plant is using (4.52 L/kWh) × (0.0003 kWh) = 0.00136 L = 1.36 mL of water per prompt.

Adding this to Google’s original estimate for the water used in the data center itself, we get 1.62 mL of water per prompt.
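The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the calculation in the text: the function and constant names are made up for this example, the 4.52 L/kWh and 0.26 mL figures come from the sources cited in this post, and the second energy value (0.24 Wh) is the median quoted from Google earlier, included to show how little the rounding to ~0.3 Wh changes the result.

```python
# Water intensity of power plants serving US data centers (LBNL figure cited in the post).
OFFSITE_L_PER_KWH = 4.52
# Google's reported onsite (data center cooling) water per median Gemini text prompt, in mL.
ONSITE_ML_PER_PROMPT = 0.26


def offsite_water_ml(energy_wh: float, litres_per_kwh: float = OFFSITE_L_PER_KWH) -> float:
    """Water used at the power plant, in mL, to generate `energy_wh` watt-hours."""
    energy_kwh = energy_wh / 1000.0        # Wh -> kWh
    litres = energy_kwh * litres_per_kwh   # kWh * (L / kWh) -> L
    return litres * 1000.0                 # L -> mL


def total_water_ml(energy_wh: float, onsite_ml: float = ONSITE_ML_PER_PROMPT) -> float:
    """Onsite plus offsite water per prompt, in mL."""
    return onsite_ml + offsite_water_ml(energy_wh)


if __name__ == "__main__":
    # 0.3 Wh is the rounded figure used in this post; 0.24 Wh is the quoted median.
    for energy_wh in (0.3, 0.24):
        print(
            f"{energy_wh} Wh -> offsite {offsite_water_ml(energy_wh):.2f} mL, "
            f"total {total_water_ml(energy_wh):.2f} mL"
        )
```

Either way the total lands between roughly 1.3 and 1.6 mL per prompt, so the choice of energy figure does not change the order of magnitude.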

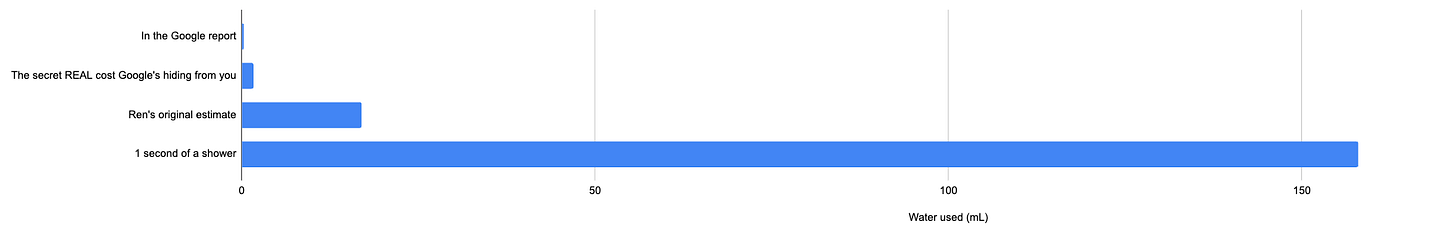

So instead of using 5 drops of water, it’s using about 32.
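For anyone who wants to check the drop conversion, here is a minimal sketch. It assumes a drop is 0.05 mL, which is my assumption rather than anything stated in Google's paper; it is the value that makes 0.26 mL come out to about 5 drops and 50 mL to 1000. The function name is hypothetical.

```python
# Assumed drop volume in mL. Not from Google's paper; chosen because it
# reproduces "about five drops" for 0.26 mL.
ML_PER_DROP = 0.05


def ml_to_drops(volume_ml: float, ml_per_drop: float = ML_PER_DROP) -> float:
    """Convert a volume in millilitres to a number of drops."""
    return volume_ml / ml_per_drop


for label, volume_ml in (
    ("onsite only", 0.26),
    ("onsite + offsite", 1.62),
    ("upper estimate quoted from Ren's research", 50.0),
):
    print(f"{label}: about {round(ml_to_drops(volume_ml))} drops")
```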

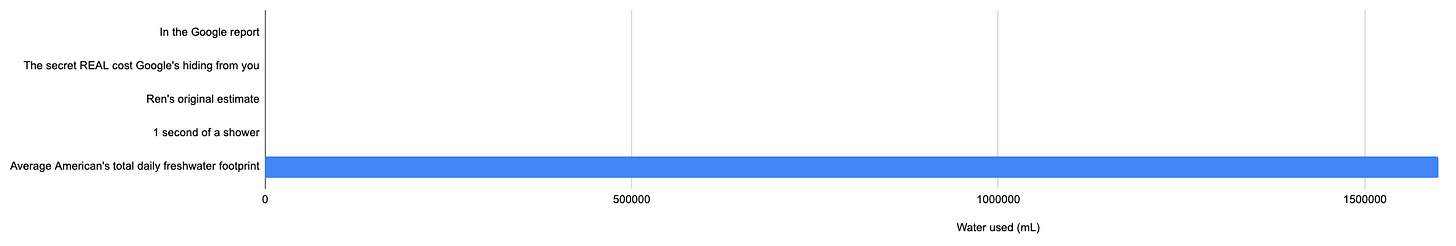

Putting this number in context, the average American’s lifestyle uses 1600 liters of water per day when you include our food production, energy generation, and at-home use (this is consumptive use of specifically surface and groundwater, or “blue water”, where the water is not returned to its original source).

This means that Google’s original claim was that each Gemini prompt uses 0.000016% of your daily water footprint, and the correct value (that Google is, quote, “hiding” from you) is 0.00010%. Instead of 1 in 6.2 million of your daily water use, a Gemini prompt is actually as much as 1 in 1 million. Google originally misled you by announcing that it takes about 600 Gemini prompts’ worth of water for 1 second of your shower. In reality, it only takes 97.
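These comparisons are easy to reproduce. The sketch below uses the 1600 L/day footprint and the 0.26 mL and 1.62 mL per-prompt figures from this post. The shower flow rate is an assumption on my part for the example (2.5 US gallons per minute, roughly 158 mL per second); the helper names are hypothetical.

```python
DAILY_FOOTPRINT_L = 1600.0  # average American's daily blue-water footprint, from the post
ONSITE_ML = 0.26            # Google's onsite figure per prompt
TOTAL_ML = 1.62             # onsite + offsite figure per prompt

# Assumption for illustration: a 2.5 US gal/min shower, converted to mL per second.
SHOWER_ML_PER_SECOND = 2.5 * 3785.41 / 60.0


def share_of_daily_footprint(prompt_ml: float, daily_l: float = DAILY_FOOTPRINT_L) -> float:
    """Fraction of the daily water footprint used by one prompt."""
    return prompt_ml / (daily_l * 1000.0)


def prompts_per_shower_second(prompt_ml: float) -> float:
    """How many prompts use as much water as one second of showering."""
    return SHOWER_ML_PER_SECOND / prompt_ml


for label, ml in (("onsite only", ONSITE_ML), ("onsite + offsite", TOTAL_ML)):
    share = share_of_daily_footprint(ml)
    print(
        f"{label}: {share * 100:.6f}% of daily use, about 1 in {1 / share:,.0f}, "
        f"{prompts_per_shower_second(ml):.0f} prompts per shower-second"
    )
```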

Do you think the average reader would leave the article with an accurate understanding of the magnitudes here? Is it fair to say Google is “hiding” this information?

Not reporting offsite water doesn’t seem inherently deceptive, as long as you make it clear that you’re only reporting onsite water

I can see the argument for both reporting and not reporting offsite water, but I don’t think it’s especially deceptive to only include onsite water.

Obviously people are right to ask for the full water impacts of data centers on the locations where they are built, so it makes sense to include them in the total water cost, and in most AI environmental reporting it’s become standard and expected. But Google is very clear throughout its paper that it’s only counting the water in the data centers themselves. They define in their report:

Water consumption / prompt: the water consumed for cooling machines and associated infrastructure in data centers

It would seem deceptive if they were dodgy about what specific water they were measuring, but they give this very clear definition up front in their paper of exactly what they’re measuring. This does not seem deceptive.

Ren is correct to note that Google misleadingly compares their onsite water measurements to his total onsite + offsite measurements, and over-reports Ren’s original findings (they imply Ren’s maximum estimates were actually his averages). This is the place where Google did seem to behave somewhat deceptively, but to be clear, Ren’s original paper found that the US average data center’s water used per prompt was 16.9 mL. If we include both the offsite and onsite water, Gemini’s actual water cost is 1/10th of this value. While Google should have phrased this better, it’s still true that this is drastically lower than previous estimates.
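To make the difference between the two comparisons concrete, here is a small sketch using only numbers already quoted in this post: Google's 0.26 mL onsite figure, the 1.62 mL onsite + offsite total, Ren's 16.9 mL US average, and the 50 mL upper estimate. The `times_lower` helper is a name I made up for this example.

```python
import math

GEMINI_ONSITE_ML = 0.26    # Google's onsite-only figure
GEMINI_TOTAL_ML = 1.62     # onsite + offsite, as calculated in this post
REN_US_AVERAGE_ML = 16.9   # Ren's US average per prompt (onsite + offsite)
REN_MAX_ML = 50.0          # upper estimate from Ren's research


def times_lower(previous_ml: float, new_ml: float) -> float:
    """How many times smaller the new estimate is than the previous one."""
    return previous_ml / new_ml


comparisons = {
    "Google's framing (onsite vs. Ren's maximum)": (REN_MAX_ML, GEMINI_ONSITE_ML),
    "like for like (total vs. Ren's US average)": (REN_US_AVERAGE_ML, GEMINI_TOTAL_ML),
}

for label, (previous_ml, new_ml) in comparisons.items():
    ratio = times_lower(previous_ml, new_ml)
    print(f"{label}: {ratio:.0f}x lower, {math.log10(ratio):.1f} orders of magnitude")
```

The like-for-like comparison comes out to roughly one order of magnitude, compared with more than two under Google's framing.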

Let’s reread one section of the article:

When it comes to calculating water consumption, Google says its finding of .26ml of water per text prompt is “orders of magnitude less than previous estimates” that reached as high as 50ml in Ren’s research. That’s a misleading comparison, Ren contends, again because the paper Ren co-authored takes into account a data center’s total direct and indirect water consumption.

The complaint here, which I think should have been spelled out in full, goes like this:

“Google incorrectly compares their new measurement of 0.26 mL of water per prompt to Ren’s original estimate of 16.9 mL, and they’re using that bad comparison to say it’s much smaller. In reality, each prompt uses 1.62 mL of water.”

To be clear, Ren has other complaints about misreadings of the data that are separate from the specific point I’m making here. I don’t want to misrepresent him, so you can read them in full here and here.

Why do this?

The title of this article could have been “Google says a typical AI text prompt only uses 5 drops of water — experts say that’s misleading… it’s 32 drops!” That’s obviously the fault of the person writing the headline, not the author.

It makes sense to write an article about how Google reported a number in a way that made it look lower than what industry standards require. It makes sense to focus on other specific issues with the paper.

It does not make sense to fill an article with talk about the “hidden offsite water cost” of the model without just telling your readers what that very publicly accessible water cost is somewhere in the article. It also does not make sense to imply that Google is “hiding” this when their report is very up front about only considering onsite water.

Someone could have bothered to just do the incredibly simple math to show what the numbers being talked about are. This information is easily available. Ren himself cites the study where I got the 4.52 L/kWh statistic. Everyone involved knows how to find this.

It definitely doesn’t make sense to leave readers hanging with the sense that their AI prompts use significant amounts of water. Everyone involved surely knows that a lot of people are under the mistaken belief that their personal AI use significantly adds to their daily water footprint.

I don’t want to single out the specific reporter, because this tendency to not give the simple small numbers involved in individual AI prompts is widespread across reporting on the topic. I do want to note that this reporter is not some rando who wouldn’t have known to include this number. Their bio says they are “a senior science reporter covering energy and the environment with more than a decade of experience.” I don’t understand why someone with their level of knowledge wouldn’t think “My whole article is about how Google is ominously leaving out the full water cost of AI. Let me just show my readers what that water cost is, using the incredibly easy method for calculating it.”

This leaves us with a misleading headline and article. Readers are left with the sense that Google’s actively lying to them about the true water costs of their models, and the unstated but seemingly obvious next step is to assume that the water costs are significant. In reality, Google’s paper makes it clear that they’re measuring only the water in data centers themselves. Including the offsite and onsite water means each Gemini prompt uses 1/38,000th of the water cost of a single beef patty. I think readers would come away from this article with a wildly different and wrong idea of how much water is involved.

Address misconceptions with me

We need a lot more people publicly calling out articles like this that leave readers with a sense that AI companies are using significant amounts of energy and water per prompt. Many, many environmentalists have been tricked into thinking individual AI use is significantly bad for the environment, and are wasting a lot of time and energy encouraging others to boycott AI apps. This is a ridiculous waste of time for the climate movement, and it has been caused in part by reporters not sharing the simple numbers involved.

Calling this out is easy and just takes some simple math. If you ever see anyone complain that reported water use doesn’t include the offsite water, just use the fact that the average power plant providing energy for a data center uses 4.52 L of water per kWh (you can find it on page 57 here). Here’s a great summary of the Google report. You should consider writing about it publicly and posting it where other people can see it. I’ll have a much longer post soon on more ways to address this stuff.

Thanks for writing this. In addition, check out this article.

“My overall takeaway is that you need to be careful when talking about water use: it’s very easy to take figures out of context, or make misleading comparisons. Very often I see alarmist discussions about water use that don’t take into account the distinction between consumptive and non-consumptive uses. Billions of gallons of water a day are “used” by thermal power plants, but the water is quickly returned to where it came from, so this use isn’t reducing the supply of available fresh water in any meaningful sense. Similarly, the millions of gallons of water a day a large data center can use sounds like a lot when compared to the hundreds of gallons a typical home uses, but compared to other large-scale industrial or agricultural uses of water, it's a mere drop in the bucket.”

https://www.construction-physics.com/p/how-does-the-us-use-water

The article also quotes Alex de Vries-Gao, who has apparently become the go-to source for these kinds of articles. He is not an expert on either AI or IT in general, and he was last in the news with this article that was repeated everywhere:

https://vu.nl/en/news/2025/ai-rapidly-on-its-way-to-becoming-the-largest-energy-consumer

There is so much wrong with this study, I don't even know where to begin. It essentially assumes that every GPU manufactured is used exclusively for AI training (no consumer use, no non-AI data center use, no inference or inference-specific hardware) and runs at its theoretical max TDP forever. It conflates manufacturing energy with operational energy instead of amortizing it over the hardware's lifespan. It confuses instantaneous supply bottlenecks with long-term deployment.

I did a somewhat naive calculation to correct for this and found that the study overstates AI power consumption for 2025 by about 3x, and by at least 2x. Everyone in AI scoffed at it, but its narrative wasn't countered in major publications, and it passes for expert wisdom now.