Jevons’ Paradox

A lot of people in debates about AI and the environment are talking about Jevons’ Paradox: Making something more energy efficient sometimes causes it to use more energy in total.

The way this works is simple. Making something more efficient often makes it cheaper (energy costs money), so more people use it. If it becomes popular and enough people use it, it adds up to consuming more total energy than if it stayed inefficient and unpopular. Technically, Jevons’ Paradox applies to any resource, not just energy and emissions, but we’ll stick to climate-relevant stuff for now.

Jevons’ Paradox doesn’t always happen. There are plenty of cases where making things more efficient was good for the climate and didn’t lead to more emissions. It’s just a dynamic we need to watch out for.
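One textbook way to see why it only happens "sometimes" is a constant-elasticity demand model. This is a minimal sketch under strong simplifying assumptions that are not from the post: energy is the only cost of each use, so a k-fold efficiency gain cuts the price per use k-fold, and demand responds with a fixed price elasticity (a hypothetical parameter). Under those assumptions total energy scales by k^(elasticity − 1), so total use only rises when the elasticity is above 1.

```python
def total_energy_ratio(efficiency_gain: float, elasticity: float) -> float:
    """Ratio of total energy use after vs. before an efficiency gain.

    Hypothetical assumptions: energy is the only cost of each use, so the
    price per use falls by the same factor as energy per use, and demand
    follows a constant price elasticity: uses ∝ price ** -elasticity.
    """
    usage_multiplier = efficiency_gain ** elasticity   # more uses at the lower price
    energy_per_use_multiplier = 1 / efficiency_gain    # each use needs less energy
    return usage_multiplier * energy_per_use_multiplier


# A 30x efficiency gain under three hypothetical demand responses.
for elasticity in (0.5, 1.0, 1.5):
    ratio = total_energy_ratio(30, elasticity)
    outcome = "Jevons' Paradox" if ratio > 1 else "no increase in total use"
    print(f"elasticity={elasticity}: total energy x{ratio:.2f} ({outcome})")
```

With an elasticity of exactly 1, the extra use cancels the savings; real demand responses differ by product, which is one reason the paradox shows up in some industries and not others.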

An example

Imagine all cars are super inefficient, and only get 1 mile per gallon. The gas to power them would be too expensive for the average person. Maybe a few rich people would use them, but that’s it.

Someone concerned about the environment says “Wow, those cars rich people drive are burning so much gas. If I make them more efficient, that will be good for the environment!”

This person finds a way to optimize cars to raise their efficiency from 1 mile per gallon to 30 miles per gallon. They sit back and think “I’ve done such a great thing for the climate, so much gas will be saved. So many emissions prevented.”

Now that the cars get so many miles per gallon, everyone can afford them. Most people buy cars, and the total emissions of all cars skyrocket, becoming one of the main sources of all greenhouse gases on Earth.

Oops.

Jevons’ Paradox and AI

There’s growing worry that AI is facing the same issue. As labs make their models more energy efficient, they’re able to offer them for less money in more places. This means that, overall, AI is getting used so much more that the efficiency gains are causing it to use more energy (and emit more) in total.

A lot of conversations frame Jevons’ Paradox as a negative. Here’s an example from a recent article:

Google claims to have significantly improved the energy efficiency of a Gemini text prompt between May 2024 and May 2025, achieving a 33x reduction in electricity consumption per prompt. The company says that the carbon footprint of a median prompt fell by 44x over the same time period. Those gains also explain why Google’s estimates are far lower now than studies from previous years.

Zoom out, however, and the real picture is more grim. Efficiency gains can still lead to more pollution and more resources being used overall — an unfortunate phenomenon known as Jevons paradox. Google’s so-called “ambitions-based carbon emissions” grew 11 percent last year and 51 percent since 2019 as the company continues to aggressively pursue AI, according to its latest sustainability report.

That sounds bad. Here’s an even more extreme take, from a blog post that’s been popular recently: Why Saying “AI Uses the Energy of Nine Seconds of Television” is Like Spraying Dispersant Over an Oil Slick

TLDR: the smaller the number, the more you should be worried

Claims about the energy footprint of AI are suddenly everywhere. “AI search summaries use 10 times more energy than a traditional search”. “Each chatbot session consumes 500 liters of water.” Recently, Google issued its own report, full of long awaited technical detail that specialists are poring over, but the headlines were simpler: “Each prompt of Gemini uses as much energy as watching nine seconds of television.”

…

Now imagine that I made money on each prompt (or persuaded investors that I did, even though I don’t). That gives me every reason to get better at building the infrastructure to serve all of those prompts. I add data centers, each more efficient than the last. With each new round of building, the wattage of my data centers goes up and up. The racks get denser and denser, and I need more and more of them, all over the world. $600 billion worth of them, as a down payment.

In other words, the only way my per-prompt energy cost goes down is if my overall energy footprint goes up.

This is the counterintuitive reality. The lower the energy used per prompt, the more concerned you should be.

This isn’t exactly Jevons’ Paradox (the causality is flipped), but it implies a similar dynamic. The author doesn’t mention Jevons’ Paradox by name, but I assume they’d agree it’s relevant here. The piece isn’t just about Jevons’ Paradox; it’s also about how society’s general digital infrastructure is wasteful and uses a lot of energy. To put it mildly, I disagree, but that’s a separate question.

I think there’s a lot of good-faith concern about Jevons’ Paradox, but I don’t think this level of concern makes sense when you think about what’s actually happening.

A lot of framings I see of Jevons’ Paradox are actually pretty terrible, and don’t get across the subtleties of the idea. Many conversations about AI and the environment include a very simplistic and limited understanding of the trade-offs involved here.

Let’s look at those trade-offs.

Jevons’ Paradox in an industry is only bad if it leads to more total global emissions, not just more emissions in the specific industry

Solar panels

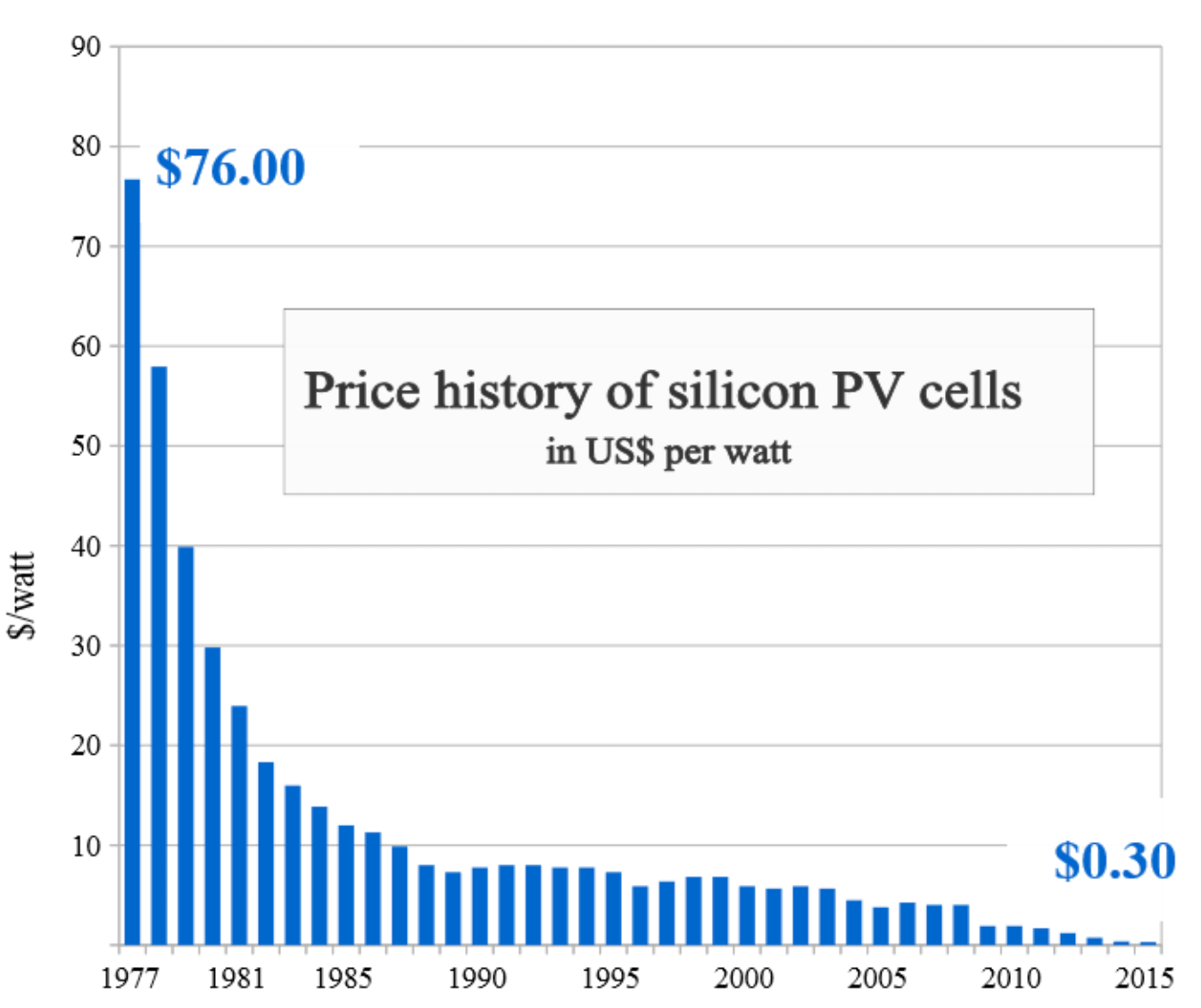

The cost of building new solar panels has been rapidly falling since the 70s.

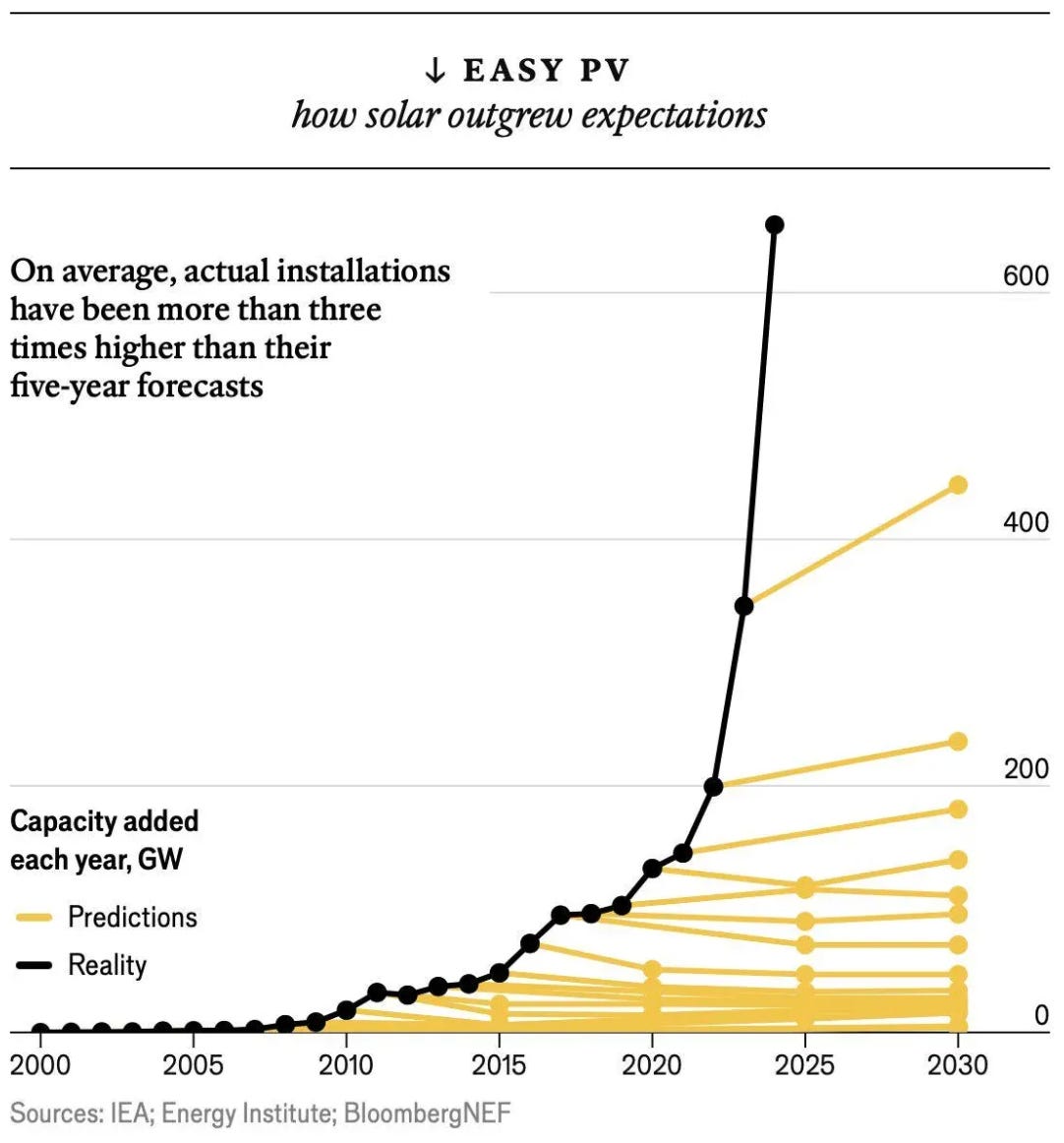

As a result, solar power has exploded way faster than energy experts predicted.

There are a lot of reasons for this, but one is that solar panels have become more efficient and less resource-intensive to produce (using less energy per panel), so they’ve become cheaper, and as a result way more people are buying them, which means panel production now uses way more resources and energy in total. This has been a wonderful surprise for those of us who have been following climate for a while. In the 2000s, it seemed unreasonable to predict such rapid growth.

The emissions we spend on producing solar panels are completely cancelled out by the emissions the solar panels prevent, and the panels go on to prevent way more. Exact estimates vary a lot, but let’s say they save 10x the carbon creating them emits.

The climate only responds to total emissions. If making a solar panel adds 1 unit of carbon, and using it subtracts 10, the solar panel is exactly equivalent to something that adds 0 units of carbon and subtracts 9. We should want to make more solar panels for the sake of the climate for the same reason you should want to keep making trades where you give someone $1 and they give you $10. You’d really want to max out those trades. Your bank account doesn’t care about the individual $1 bills you lost; it just responds to the net total at the end, which will be $9 × (the number of trades). The climate doesn’t respond to the fact that solar panels emit 1 unit of carbon; it only really responds to the fact that solar panels on net remove 9 units of carbon from the atmosphere. We should mash the “build solar panel” button a lot, just like we should mash the “trade $1 for $10” button.
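The bookkeeping behind the solar and $1-for-$10 analogies is just a sum over units. The sketch below uses the post's illustrative 1-unit cost and 10-unit benefit per panel, which are round numbers rather than measured values; the function name is hypothetical.

```python
def net_carbon(units_built: int, emitted_per_unit: float, prevented_per_unit: float) -> float:
    """Net change in atmospheric carbon from building and using `units_built` items.

    Negative means carbon removed on net. The climate responds only to this
    total, not to the gross emissions of construction on their own.
    """
    gross_added = units_built * emitted_per_unit
    gross_prevented = units_built * prevented_per_unit
    return gross_added - gross_prevented


# The post's illustrative solar panel: +1 unit to build, -10 units over its life.
for panels in (1, 100, 10_000):
    print(panels, "panels -> net", net_carbon(panels, 1, 10), "units of carbon")
```

Gross construction emissions grow with every panel, yet the net total keeps falling by 9 units per panel, the same way the bank balance grows by $9 per trade.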

Imagine that you went back in time to 1980 and showed someone this table comparing solar panel dollar and carbon costs, and the total emissions of all solar panel construction:

Their reaction is:

Oh no! This is terrible. Solar panels have fallen victim to Jevons’ Paradox. Making them cheaper has only made their total emissions explode. We should work hard to keep them expensive and inefficient. We need to stop this from happening!

How would you respond? What would you tell them?

I would tell them this:

Jevons’ Paradox is only bad for the climate if the product will, on net, increase total CO2 emissions, not just the emissions of the product itself. Jevons’ Paradox is actually good news for the climate if the product being sold will, on net, prevent more emissions than the carbon cost of creating and using it.

It’s good that solar panels were affected by Jevons’ Paradox. In some sense, it’s a good sign that they’re emitting more now, for the same reason that it would be a good sign if you spent a lot more $1 bills in the trades that get you $10 each time. If you found out that Jevons’ Paradox had occurred, and now every $10 trade only cost $0.50, and as a result even more trades were happening, that would be a good situation for you.

Obviously, in an ideal world, we’d want constructing the panels to emit less, just like you’d rather pay $0.50 for each $10, but the fact that panels are emitting so much more in total is, if anything, a sign that way, way more emissions are being prevented in total as well. Jevons’ dynamic in solar panels is a way to get society to make way more +1 -10 carbon tradeoffs.

There are industries where Jevons’ Paradox has been very bad for the climate. The most obvious is cars. Cars becoming more affordable and energy efficient has been uniquely bad for the climate, because cars emit a lot and don’t prevent many emissions elsewhere.

These examples show that it’s only bad for the climate if an industry experiencing the Jevons’ Paradox dynamic is expected to, on net, cause more total emissions than it prevents. If it’s expected to prevent more emissions than it causes (especially if it’s expected to prevent more and more as it grows, like solar panels do) then it’s neutral or even good that the Jevons’ dynamic is happening.
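The rule above can be checked by applying the same Jevons-style shift (less carbon per unit, but many more units) to two hypothetical products: one that prevents more than it emits (solar-like) and one that mostly just emits (car-like). All numbers and names here are made up for illustration, and the sketch assumes the carbon each unit prevents elsewhere doesn't change.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    units: float               # how many are built/used
    emitted_per_unit: float    # carbon added per unit
    prevented_per_unit: float  # carbon avoided elsewhere per unit

    def net(self) -> float:
        """Net carbon added (positive) or removed (negative) across all units."""
        return self.units * (self.emitted_per_unit - self.prevented_per_unit)


def after_jevons(p: Product, efficiency_gain: float, usage_growth: float) -> Product:
    """Hypothetical Jevons shift: each unit emits less, but far more units are used.

    Assumes the carbon each unit prevents elsewhere is unchanged.
    """
    return Product(
        name=p.name,
        units=p.units * usage_growth,
        emitted_per_unit=p.emitted_per_unit / efficiency_gain,
        prevented_per_unit=p.prevented_per_unit,
    )


solar_like = Product("solar-like", units=100, emitted_per_unit=1.0, prevented_per_unit=10.0)
car_like = Product("car-like", units=100, emitted_per_unit=10.0, prevented_per_unit=1.0)

for product in (solar_like, car_like):
    shifted = after_jevons(product, efficiency_gain=2, usage_growth=10)
    print(f"{product.name}: net {product.net():+.0f} -> {shifted.net():+.0f}")
```

In both cases gross emissions from the product itself rise fivefold after the shift. What differs is the sign of the per-unit net effect: the solar-like product goes from removing 900 units to removing 9,500, while the car-like one goes from adding 900 to adding 4,000.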

AI

At first, it might seem crazy to compare AI to solar panels. Solar panels are a miraculous climate technology. AI is a very broad general tool that’s emitting a lot.

I’m not asking you to think of AI as miraculous climate tech. All I’m asking is to consider the question “Will AI, on net, prevent more emissions than it causes?”

What experts predict

It is really really really hard to predict what is going to happen with AI. Expert predictions are useful here, but we should take them with a grain of salt. Here’s a compilation of expert predictions. I’m trying to paint a complete picture here. If you think I’m leaving any important predictions out from generally trustworthy experts, let me know and I’ll add them:

2025

IEA: Energy & AI: If widely adopted, existing AI applications could cut emissions by ~5% of energy‑related CO₂ in 2035; warns that rebound (e.g., shifts to autonomous vehicles) could undercut benefits. IEA’s “widespread adoption” case shows use‑phase savings that exceed data center emissions in 2035, but that outcome is not guaranteed. The IEA separately predicted that data centers’ total greenhouse gas savings in 2030 will be 4x-10x as much as AI’s total emissions in data centers.

Grantham Research Institute & Systemiq: Green and intelligent: the role of AI in the climate transition: With effective application in power, transport, and food consumption, AI could reduce 3.2–5.4 GtCO₂e/year by 2035 (≈ single‑digit % of global GHGs), and authors argue these savings would outweigh AI‑driven power demand.

2024

Nature Communications: Potential of artificial intelligence in reducing energy and carbon emissions of commercial buildings at scale: AI adoption in U.S. commercial buildings could cut energy use and CO₂ by ~8–19% by 2050. Energy use in buildings is responsible for ~26% of global emissions, so if savings of this size were achieved in buildings worldwide, this one AI application alone would reduce global emissions by at least 2% (8% of 26%). That alone would more than cancel out all data center emissions.
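The "at least 2%" figure comes from multiplying the study's 8–19% range by the ~26% share of global emissions. A minimal check, assuming (as the post implicitly does) that savings of this size could be achieved in buildings worldwide; the study itself only covers U.S. commercial buildings.

```python
BUILDINGS_SHARE_OF_GLOBAL = 0.26   # share of global emissions from building energy use
SAVINGS_RANGE = (0.08, 0.19)       # study's projected energy/CO2 cut by 2050

# Assumption: savings of this size are achieved in buildings worldwide.
low, high = (s * BUILDINGS_SHARE_OF_GLOBAL for s in SAVINGS_RANGE)
print(f"Implied cut in global emissions: {low:.1%} to {high:.1%}")
```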

2022

IPCC: AR6 WGIII: Digitalization (incl. AI) can enable mitigation but may increase energy demand and wipe out efficiency gains without proper governance; highlights rebound effects as a material risk. (No single net number.)

2021

Brockway et al: Energy efficiency and economy-wide rebound effects: A review of the evidence and its implications: Median economy‑wide rebound ≈50–60% of expected energy savings (meaning roughly 40–50% of the expected savings still materialize, so on net AI-driven efficiency gains would still save significant energy); in some cases ≥100% (i.e., savings erased).
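Economy-wide rebound is expressed as the share of expected savings that extra demand eats up. A minimal sketch of that bookkeeping; the function name and the 100-unit expected saving are hypothetical.

```python
def realized_savings(expected_savings: float, rebound: float) -> float:
    """Savings left after rebound, where rebound is a fraction of expected savings.

    rebound = 0.5 means half the expected savings are eaten by new demand;
    rebound >= 1.0 ("backfire") means the savings are erased or reversed.
    """
    return expected_savings * (1 - rebound)


expected = 100.0  # hypothetical expected savings, arbitrary energy units
for rebound in (0.5, 0.6, 1.0, 1.2):
    print(f"rebound {rebound:.0%}: realized savings {realized_savings(expected, rebound):+.0f}")
```

At the median rebound range, 40 to 50 of every 100 expected units are still saved; only at 100% or above do the savings disappear.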

A general pattern emerges where it seems like in expectation, reports are either finding that AI will net out to 0 or be pretty net positive for the environment. The IEA separately wrote that they expect the emissions prevented by AI to be 3-4x higher than all global data center emissions (including all data centers that support the internet).

I lean toward thinking that AI will, on net, reduce emissions rather than increase them, and that the more it’s used and deployed, the more net emissions it’s likely to reduce. In this scenario, the Jevons’ dynamic with AI is leading us to a world where AI reduces total emissions more on net, so we should be cautiously optimistic about the fact that the Jevons’ dynamic is happening, in the same way we’re optimistic about the Jevons’ dynamic with solar panels (though with AI we have much more uncertainty).

Why would AI reduce emissions?

Most of AI’s effects on emissions will probably happen in the way AI’s used, not in the data centers that run it.

Consider Amazon. There’s an ongoing debate about Amazon’s net effects on climate change. Amazon optimizes package deliveries, but maybe causes people to consume more in total, and there’s a debate about how the emissions of the supply chains Amazon uses compare to emissions of other things we buy.

But no one in the environmental debate about Amazon has said “The most important question is how much energy its website is using in data centers.”

That’s because the energy its data centers use is tiny relative to the website’s effects on economic and personal behavior.

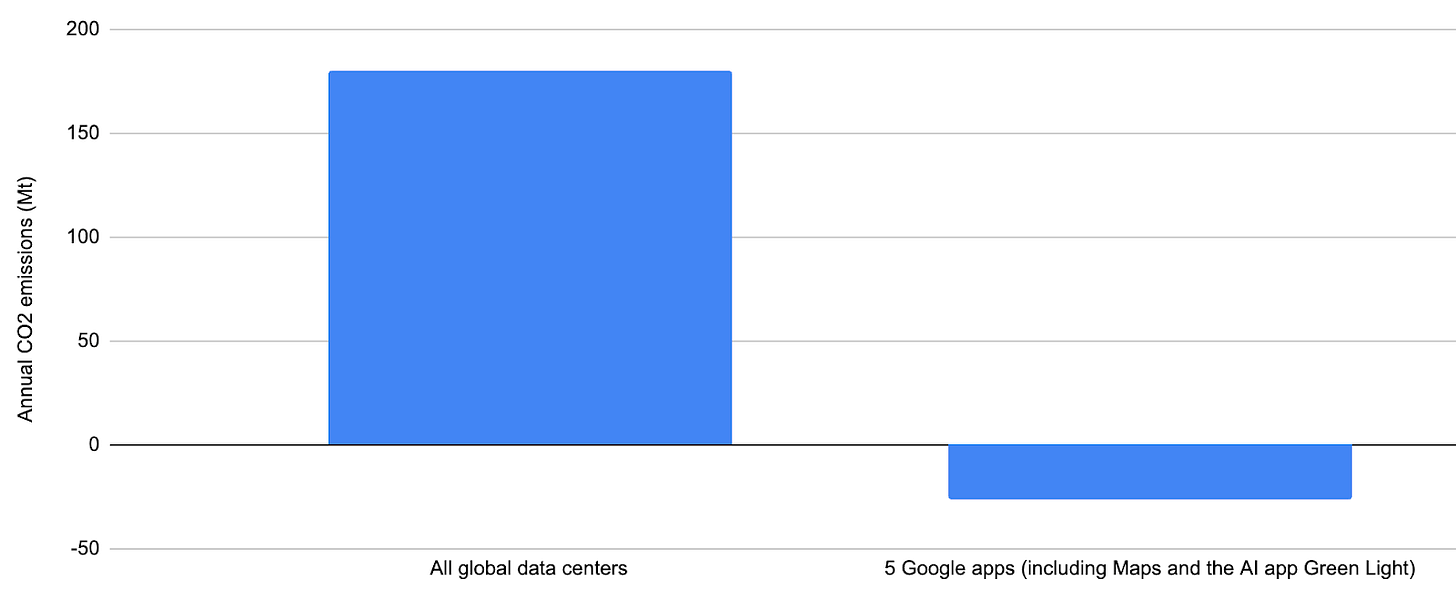

Another example of a way the internet has an outsized effect on the physical world is Google Maps. Maps uses hardly any energy in data centers, and has optimized billions of car trips to be as physically short as possible. It seems certain that the emissions Maps has saved completely dwarf the emissions it’s caused by using energy in data centers. Google reported that just five of its apps (including Maps) prevented ~26 million tonnes CO2e in 2024. All global data centers (AI + the internet) combined emitted 180 Mt CO2 in 2024, so 5 Google apps alone might have prevented the equivalent of ~14% of all current data center emissions.
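The ~14% figure is a single ratio. A quick check using the two numbers from the paragraph; note that the avoided figure is a Google-reported estimate in CO2e while the data center figure is in CO2, so the comparison is approximate.

```python
GOOGLE_FIVE_APPS_AVOIDED_MT = 26   # Google-reported avoided emissions, 2024 (Mt CO2e)
ALL_DATA_CENTERS_MT = 180          # all global data center emissions, 2024 (Mt CO2)

share = GOOGLE_FIVE_APPS_AVOIDED_MT / ALL_DATA_CENTERS_MT
print(f"Avoided emissions equal {share:.0%} of global data center emissions")
```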

This shouldn’t surprise us. Cars emit a lot and aren’t especially optimized. Finding ways to optimize driving (which both the internet and AI are useful for) should yield a lot of low-hanging fruit for reducing emissions.

AI is obviously using way, way more energy in data centers than Amazon or Maps, but as I’ve written about before, this is mainly a result of AI being used so much, not each individual prompt using a significant amount of energy. AI has the potential to have large effects on the physical world per unit energy it uses in data centers. The more energy efficient each prompt is, the more potential there is for the effects on the physical world to be much greater than the energy costs in data centers.

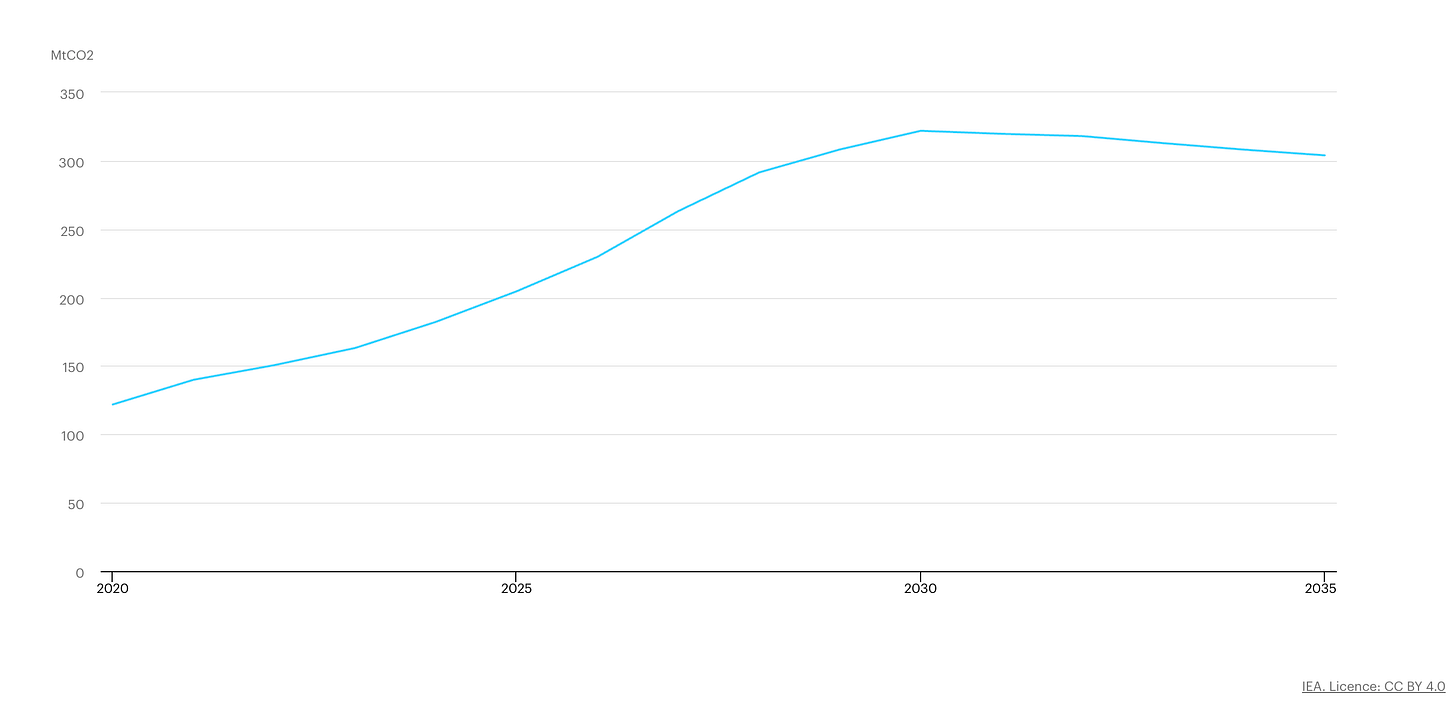

The IEA expects data center emissions to peak in 2030, at about 320 Mt CO2 that year.

Data center energy use will continue to grow after that, but will be balanced out by much greener energy sources, so emissions will (very slowly) fall.

For context, 320 Mt will (under their most aggressive path to net zero emissions) be ~1.3% of global emissions that year. Obviously not nothing. But what this means is that if all AI and all the global internet combined are optimizing processes in society enough to reduce global emissions by just 1.3%, they will completely pay for themselves, and anything after that will be net good for the climate. I think the internet is probably already doing this anyway, and AI will accelerate that to save even more emissions.
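The break-even point follows directly from those two numbers. A minimal sketch, assuming the IEA's 320 Mt projection and the ~1.3% share; the implied global total is just derived from them, not an independent estimate, and the reduction shares in the loop are hypothetical.

```python
DATA_CENTER_EMISSIONS_2030_MT = 320   # IEA projected peak, Mt CO2
SHARE_OF_GLOBAL_2030 = 0.013          # ~1.3% under IEA's net-zero pathway

# Global emissions implied by the two numbers above (not an independent estimate).
implied_global_mt = DATA_CENTER_EMISSIONS_2030_MT / SHARE_OF_GLOBAL_2030


def net_effect_mt(reduction_share: float) -> float:
    """Net Mt CO2 added (+) or removed (-) by AI and the internet in 2030.

    reduction_share is a hypothetical fraction of global emissions that
    AI and the internet help avoid through optimizations elsewhere.
    """
    return DATA_CENTER_EMISSIONS_2030_MT - reduction_share * implied_global_mt


for reduction_share in (0.005, 0.013, 0.03):
    print(f"{reduction_share:.1%} avoided -> net {net_effect_mt(reduction_share):+,.0f} Mt CO2")
```

Any avoided share above ~1.3% flips the net effect negative, which is the "pay for themselves" threshold described above.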

A lot of people’s exposure to deep learning is ChatGPT, which can often be goofy and give wrong answers, and is used to cheat or skip out on work. I think this is causing people to assume that deep learning more broadly also isn’t useful. This is wrong.

Deep learning more broadly seems incredibly useful, especially for climate. It’s useful wherever there are situations where we know roughly what we want computers to do or respond to, but we can’t give them step-by-step instructions for how to do that. It’s almost as if computers themselves have been invented a second time, this time to solve a huge new host of problems traditional computing could never approach.

Here are just a few fields where deep learning shows incredible promise to prevent emissions. Adopting deep learning in each of these broad areas individually has the potential to reduce global annual emissions by 1%:

Materials science: The discovery of better materials to use in renewable energy tech (like solar panels and batteries). Even small efficiency gains here have huge climate payoffs. I think materials science might be the main field critics of AI’s environmental impacts aren’t paying enough attention to.

Energy grid optimization: Smart grid technology (where the energy grid optimizes itself and moves and stores energy based on sudden changes in supply and demand) is going to be critical for building green energy, since many renewable sources (especially solar and wind) are very intermittent and benefit from a grid that can respond rapidly and intelligently to sudden changes in energy supplies. Deep learning has a lot of potential to benefit smart grid technology. AI can also provide better weather forecasts that smart grids can respond to.

Building energy use optimization: Energy used in buildings is responsible for 26% of all greenhouse gas emissions. Even slightly more optimal heating and cooling systems would help a lot.

Transit: For flights, deep-learning-guided contrail avoidance can significantly reduce planes’ warming impact. Urban traffic signal optimization can reduce emissions as well as local air pollution for pedestrians.

The Jevons’ Paradox in what AI optimizes

You might have noticed that a lot of the above examples themselves involve making other industries more efficient, so they risk inducing the same Jevons’ dynamic there. This is far from guaranteed, but there are specific cases where AI reducing energy costs seems likely to create a Jevons’ dynamic in another industry. The most likely place for this to happen is autonomous vehicles. It seems likely that autonomous vehicles will be extremely optimized compared to most drivers. They’ll be able to constantly monitor traffic patterns and other AV behavior better than humans (not to mention that a human won’t need to spend time driving them). This could reduce costs so much that, on net, people travel by car far more (including people who couldn’t drive before, like kids). This seems likely to raise emissions.

The question is whether the new demand created by the cheaper goods and services AI provides will, on net, lead to higher emissions. As of writing, it seems like experts are unsure but lean in the direction that AI is more likely than not to reduce net emissions. A lot of this will depend on good policy and practices around AI use that environmentalists should push for. It seems like AI’s energy in data centers will be a small part of its climate impact.

My argument in a nutshell

Deep learning seems poised to prevent more emissions than it causes. To do this, it probably needs to be deployed widely across a lot of society (for example, a deep learning model optimizing all of a city’s traffic lights to minimize stops), which will require a lot of energy, but on net this energy will “pay for itself” in the same way solar panels “pay for themselves.” If Jevons’ Paradox is happening to a product like this, that seems net good for the environment. The more AI takes up our energy grid, the more likely it is that way more emissions are being prevented elsewhere. This isn’t perfectly linear (obviously people use AI for a lot of goofy things), but it seems likely that a world where AI permeates the economy more and optimizes lots of complex problems (and uses more energy as a result) is also a world where, on net, more stupid inefficiencies are being ironed out, new discoveries in green energy tech happen faster, and more emissions are prevented in total. We should have a lot of uncertainty about this, but expert predictions seem to lean in the direction that AI will prevent more emissions than it causes, and this will scale with how much it’s used in specific areas. This already happened with the internet.

I wouldn’t put all my money on AI being net good for the environment, but I also wouldn’t automatically assume it’s bad that Jevons’ dynamic is happening here.

Thanks for the article. I'm wondering whether it makes sense to lump all AI together here though — the increase in power is mainly coming from GenAI, but the energy-saving benefits seem to be in other areas of Deep Learning. I can't really see how GenAI ends up as a net positive on the climate now (which ofc doesn't necessarily mean it's worth it to stop using it).

It also seems like Jevons' paradox is very unlikely to apply to AI, at least in terms of power efficiency. Power is only ~10% of the cost of GPU compute, so as far as price signals go it's pretty minor. So Jevons' paradox would only apply to the extent that people are curtailing usage due to carbon footprint concerns, which I expect is a pretty small share of people.

[10% ballpark is for training compute, and comes from Fig 5 of Epoch's paper here: https://arxiv.org/html/2405.21015v1]