A short summary of my argument that using ChatGPT isn't bad for the environment

To share with anyone still worried

A few months ago I compiled my core argument that it’s completely, conclusively ridiculous to worry about the environmental impacts of your personal chatbot prompts into a short summary people could share with skeptics, so they wouldn’t have to read both of my super long posts. I added it to one of those long posts, but I realized it might have gotten buried there, so I’m posting it here to make it easier to share. If you’d like to go much deeper on any of the arguments, explore this post. If you don’t see me addressing an argument you think is important, see if I addressed it here. If your main concern is AI more broadly, I can’t address every last environmental objection to all AI products in one post. I’ve written about the topic a lot in general here.

In the past few months I’ve spoken to a lot of people facing objections to using chatbots, including a surprising number of people who want to buy chatbot access for their large organizations and have been shot down because of worry over the environmental impact of individual prompts. I think it’s crazy that this is still happening and want a much shorter post readers can send to people who are still misinformed about this. Here it is!

Using ChatGPT isn’t bad for the environment

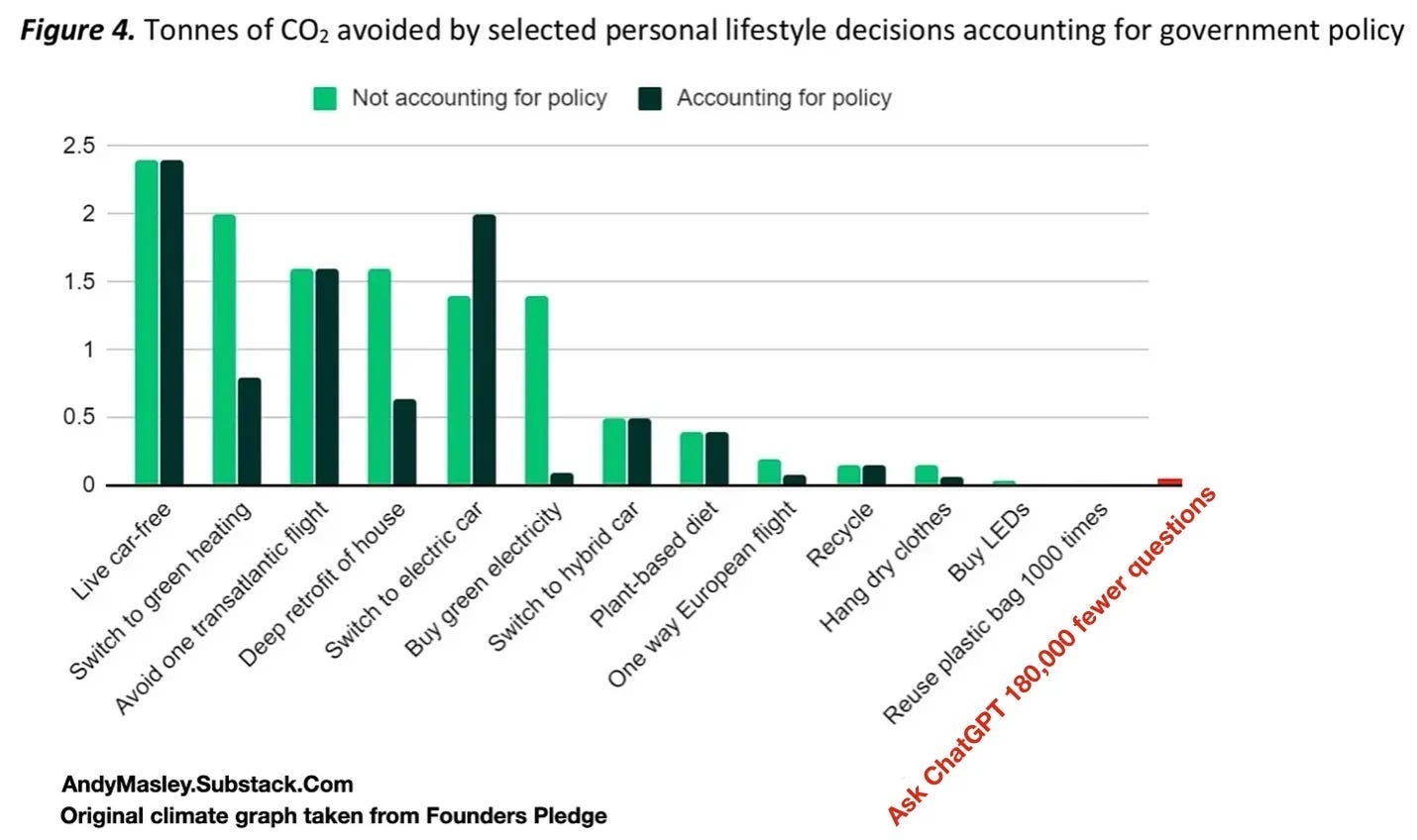

Using chatbots emits the same tiny amounts of CO2 as other normal things we do online, and way less than most offline things we do. Even when you include “hidden costs” like training, the emissions from making hardware, energy used in cooling, and AI chips idling between prompts, the carbon cost of an average chatbot prompt adds up to less than 1/150,000th of the average American’s daily emissions. Water is similar. Everything we do uses a lot of water. Most electricity is generated using water, and most of the way AI “uses” water is actually just in generating its electricity. The average American’s daily water footprint is ~800,000 times as much as the full cost of an AI prompt. The actual amount of water used per prompt in data centers themselves is vanishingly small.

Because chatbot prompts use so little energy and water, if you’re sitting and reading the full responses they generate, it’s very likely that you’re using way less energy and water than you otherwise would in your daily life. It takes ~1000 prompts to raise your emissions by 1%. If we assume each response is ~100 words, and you read at the speed an average American does, and writing the prompts and waiting for the response took you no time, it would take you 6 hours and 30 minutes to read all the responses. So you would use half your waking day on an app that in total caused 1% of your emissions. If you sat at your computer all day, sending and reading 1000 prompts in a row, you wouldn’t be doing more energy intensive things like driving, or using physical objects you own that wear out, need to be replaced, and cost emissions and water to make. Every second you spend walking outside wears out your sneakers just a little bit, to the point that they eventually need to be replaced. Sneakers cost water to make. My best guess is that every second of walking uses as much water in expectation as ~7 chatbot prompts. So sitting inside at your computer saves that water too. It seems like it’s near impossible to raise your personal emissions and water footprint at all using chatbots, because spending all day on something that ends up causing 1% of your normal emissions is exactly like spending all day on an activity that costs only 1% of the money you normally spend.
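For anyone who wants to check the reading-time arithmetic above, here is a minimal Python sketch. The prompt count and words per response come from the paragraph. The reading speed of 256 words per minute is an assumption (the post doesn't state the value it used), chosen because it roughly reproduces the 6 hours and 30 minutes figure.

```python
# Back-of-the-envelope check of the reading-time figure.
PROMPTS = 1000             # from the text: ~1000 prompts for ~1% of emissions
WORDS_PER_RESPONSE = 100   # from the text
READING_WPM = 256          # ASSUMPTION: words per minute, not stated in the post


def reading_hours(prompts, words_per_response, wpm):
    """Hours needed to read every response, ignoring typing and waiting time."""
    total_words = prompts * words_per_response
    return total_words / wpm / 60


hours = reading_hours(PROMPTS, WORDS_PER_RESPONSE, READING_WPM)
print(f"{hours:.1f} hours of reading")
```

A slower assumed reading speed only makes the point stronger, since the same 1000 prompts would then fill even more of the day.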

There are no other situations, anywhere, where we worry about amounts of energy and water this small. I can’t find any other places where people have gotten worried about things they do that use such tiny amounts of energy. Chatbot energy and water use being a problem is a really bizarre meme that has taken hold, I think mostly because people are surprised that chatbots are being used by so many people that on net their total energy and water use is noticeable. Being “mindful” with your chatbot usage is kind of like filling a large pot of water to boil to make food, and before boiling it, taking a pipet and removing tiny drops of water from the pot one at a time to “only use the water you need,” or stopping your shower a tenth of a second early for the sake of the climate. You do not need to be “mindful” with your chatbot usage for the same reason you don’t need to be “mindful” about those additional droplets of water you boil.

Some people think tiny parts of our emissions “add up” when a lot of people do them. They add up in an absolute sense, but they don’t add up to be a larger relative part of our overall emissions. If AI chatbots are just a 100,000th of your personal emissions, they are likely to be around a 100,000th of global emissions as well. We should mostly focus on systemic change over personal lifestyle changes, but if we do want to make personal lifestyle changes, we should prioritize cutting things that are actually significant parts of our personal emissions. That’s the only way we could reduce significant amounts of global emissions too.
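The “adds up in absolute terms, but not in relative terms” point can be sketched in a few lines of Python. This is a toy model under a loud simplifying assumption: every person is identical, with total emissions of 1 unit and chatbot emissions equal to the 1/100,000 share from the paragraph. The function name and the population size are made up for illustration.

```python
import math

PERSONAL_SHARE = 1 / 100_000  # from the text: chatbots as a share of personal emissions


def global_share(people):
    """people: list of (total_emissions, chatbot_emissions) pairs in any one unit."""
    total = sum(t for t, _ in people)
    chatbot = sum(c for _, c in people)
    return chatbot / total


# ASSUMPTION: everyone is identical; real usage and footprints vary a lot.
one_person = [(1.0, PERSONAL_SHARE)]
a_million_people = one_person * 1_000_000

# Absolute chatbot emissions grow a million-fold...
absolute_growth = (
    sum(c for _, c in a_million_people) / sum(c for _, c in one_person)
)
# ...but the share of the total stays where it started.
share_is_unchanged = math.isclose(
    global_share(a_million_people), global_share(one_person), rel_tol=1e-6
)
print(f"absolute growth: {absolute_growth:,.0f}x, share unchanged: {share_is_unchanged}")
```

With people who differ from each other, the global share becomes an emissions-weighted average of the personal shares, so it still can't exceed the largest personal share in the group.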

The reason AI is rapidly using more energy is that AI is suddenly being used by more people, not that AI stands out as using a lot of energy per person using it. Personal chatbot usage is a tiny fraction of AI’s total energy and water footprint; AI is being used for way more than chatbots. It’s like if the internet had been invented a second time and people were rapidly coming online.

The reason AI data centers use a lot of energy is that they are built to collect huge amounts of individually tiny computer tasks in a single physical place. This makes them more energy-efficient than other ways of doing the same things with computers. If we’re going to do things with computers, we should prefer that data centers manage a lot of it. Every time you interact with the internet, you’re using a data center in the same way you use any other computer. Globally, the average person uses the internet for 7 hours a day, but data centers only use 0.23% of the world’s energy. It’s a miracle of optimization that something we spend half our waking lives on can use less than a 200th of our energy. Computers in general have been ridiculously optimized to use as little energy as possible, so we should assume that the things we do on them will not be significant parts of our carbon footprints. It does not matter for the climate where emissions happen. If I’m right that individuals using chatbots are emitting way less than they would doing other things, then all the emissions caused by chatbots in data centers would have actually still happened, and there would have been a lot more of them, if people boycotted chatbots instead. So chatbots in data centers are often reducing emissions, they just concentrate the reduced emissions so we can see them all in one place. This makes them look bad, but they’re often preventing way more emissions that would just be more dispersed.

Data centers do put more strain on local grids than some other types of buildings, for the same reason a stadium puts more strain on a grid than a coffee shop: the stadium is serving way more people at once. Data centers are building-sized computers that tens of thousands of people are using at any one time. The reason they stand out is that they gather a large amount of aggregate energy demand into a tiny place, not that they’re using a lot of energy per user. In the equation (Total Energy) = (Energy per Prompt) x (Number of Prompts), energy per prompt is low, but the number of prompts in a data center is extremely high, so the total energy they use is high. This means that your personal use of AI is adding extremely tiny amounts of energy demand, and of all the things you can cut to reduce your emissions, it’s one of the very least promising. The fact that chatbots as a whole are using a lot of energy tells you nothing about whether your personal use of them is wasteful, in the same way that tens of billions of dollars being spent on candy bars globally doesn’t make your purchase of a single candy bar financially wasteful. Deciding that you’re going to stop using AI for the sake of the climate is like going around your home and randomly unscrewing a single LED bulb, or pausing your microwave a few seconds early to save the planet. It’s so small that it’s a meaningless distraction.
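To make the equation above concrete, here is a small Python sketch. Every number in it is a made-up placeholder, not a measurement from this post or anywhere else; the only thing it is meant to show is how a small per-prompt figure and a huge prompt count combine, and how little one person's prompts contribute.

```python
# (Total Energy) = (Energy per Prompt) x (Number of Prompts)
# ASSUMPTION: all three values below are hypothetical placeholders.
ENERGY_PER_PROMPT_WH = 0.3        # hypothetical watt-hours per prompt
PROMPTS_PER_DAY = 1_000_000_000   # hypothetical prompts served per day, all users
MY_PROMPTS_PER_DAY = 20           # hypothetical personal usage


def total_energy_wh(energy_per_prompt_wh, num_prompts):
    """Total energy in watt-hours for a given number of prompts."""
    return energy_per_prompt_wh * num_prompts


everyone_wh = total_energy_wh(ENERGY_PER_PROMPT_WH, PROMPTS_PER_DAY)
mine_wh = total_energy_wh(ENERGY_PER_PROMPT_WH, MY_PROMPTS_PER_DAY)
my_share = mine_wh / everyone_wh

print(f"all users: {everyone_wh / 1e6:,.0f} MWh per day")
print(f"me: {mine_wh:.0f} Wh per day")
print(f"my share of the total: {my_share:.1e}")
```

Notice that the personal share depends only on the ratio of prompt counts, so it stays the same whatever per-prompt energy figure you plug in.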

The vast majority of AI’s effects on the environment will come from how it’s used, not from what happens in data centers. Amazon and Google Maps both have big impacts on the climate. Amazon might help or hurt a lot, and Google Maps optimizes a lot of car trips, but also might encourage more driving. But no one in debates about Amazon or Google’s climate impact says “The most important issue is the energy costs of running this website in data centers.” That would be crazy, because the websites are tools that cause people’s behavior to change, which leads to much larger changes in the physical world. If you’re concerned about AI’s impacts on the climate, the main question should be how using AI can help or hurt the climate, not the (tiny) costs of running AI in the first place.

This is an example of something I've seen elsewhere: a focus on a single item of human activity in terms of greenhouse gas emissions, rather than looking at the full sweep. Some will zero in on flying, for example, or driving.

I fear we'll see more of this as climate action becomes more politically difficult.

Despite the campaigning of the moral panic industry complex, these mass communications do provide benefit to the average outsider. Thanks.