A few people have accused me of intentionally distracting from the broader AI/environment debate by narrowly focusing on chatbot emissions per prompt. They point out that there are a lot of other ways AI impacts emissions (the total number of prompts, how AI’s used to optimize processes or incentivize more emissions, the embodied costs of AI hardware, etc.) that I haven’t touched on, and that focusing on emissions per prompt individualizes a problem that should be getting a collective response. Someone even accused me of being in cahoots[1] with the AI labs.

I disagree for 2 reasons.

First, I wrote those posts hoping people would spend less time worrying about their individual lifestyles and more time thinking about how to actually change our energy grid systemically. I don’t want people to spend much time worried about their personal carbon footprints, and I definitely don’t want them to think that “does ChatGPT use much energy?” is the only relevant question in the AI/environment debate.

Second, the AI/environment issue is massive, and each individual question involves an incredible amount of information and nuance. Just making the case that using ChatGPT is not bad for the environment took me ~20,000 words. This is just one tiny variable in the huge debate. It’s not possible to write anything that both covers all relevant environmental questions about AI and goes into sufficient detail to do each full justice, unless it’s a full book. Saying that an article that doesn’t address every single environmental issue with AI is “meant to distract from the other harms” is basically saying we can’t do any specific deep dives on anything relevant to AI’s environmental impact at all.

I want to make it clear which parts of the debate I’ve tried to address so far, and which I haven’t. I also want to show why it’s so unreasonable to expect any one blog post to address every single issue (I’ll be attempting that soon regardless). I thought it’d be useful to map the debate.

Trying to map the debate

Emissions

Even just focusing on the climate impacts of AI can be difficult. I’m going to try to write out the main parts of the debate as different variables in a big equation for AI’s total harm to the climate.

A point I’ve been trying to get across is that the reason AI is using a lot of energy is that it’s being used a lot, not that each individual use burns a lot of energy.

We can write this as a simple equation:
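The equation itself is not shown here. Reconstructed from the sentence above, and using the term names this post uses for its variables, it would presumably read:

```latex
\[
\text{Total energy used by AI}
  = (\text{\# of prompts}) \times (\text{average energy/prompt})
\]
```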

My two big posts on individual chatbot prompts were exclusively focused on the (average energy/prompt) part of this equation. I haven’t posted anything about the total energy AI is using (~62 TWh in 2024, about 0.2% of global electricity), or the number of prompts and tasks all AI is handling. This post is a good overview of how rapidly AI’s taken off. It makes it clear that the total number of prompts is so large that even though the energy per prompt is small, the total energy used is high.

Of course, when thinking about the climate, we don’t actually care directly about the energy used. We care about the emissions. Total emissions will be equal to the total energy used by AI multiplied by the average emissions per unit of energy AI uses.
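Written in the same form as the energy equation, and assuming a single average carbon intensity for all the energy AI uses (a simplification), this would be roughly:

```latex
\[
\text{Total emissions}
  = (\text{total energy used by AI}) \times (\text{average emissions/energy used})
\]
```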

We can swap total energy used for the right side of the original equation to get:
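The resulting equation is not shown here. Substituting (# of prompts) × (average energy/prompt) for total energy used, it would presumably be:

```latex
\[
\text{Total emissions}
  = (\text{\# of prompts}) \times (\text{average energy/prompt})
    \times (\text{average emissions/energy used})
\]
```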

Of course, the total emissions of AI aren’t only determined by the emissions per prompt. They’re also determined by the emissions caused by training the AI models, and the full embodied emissions of building and recycling AI chips. Each of these adds to the total emissions:
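A reconstruction of this expanded equation, with training and embodied emissions treated as simple additive terms (the term names are taken from this post's own list of variables), might look like:

```latex
\[
\begin{aligned}
\text{Total emissions}
  ={}& (\text{\# of prompts}) \times (\text{average energy/prompt})
       \times (\text{average emissions/energy used}) \\
   &+ (\text{emissions from training models}) \\
   &+ (\text{embodied emissions of AI hardware})
\end{aligned}
\]
```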

Already getting pretty messy…

The emissions caused by AI probably won’t mainly come from the energy it directly uses in data centers. It’s likely that the vast majority of AI’s impact on emissions will come from how it’s used, not the impacts of the data centers themselves. AI is likely to both cause and prevent a huge amount of emissions. It could cause more emissions by making autonomous vehicles so readily available and cheap that the average person ends up driving much more. It could reduce emissions by advancing materials science that improves the efficiency of green tech (which would have way, way more impact on emissions than all data centers combined). So we need to add even more terms:
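A sketch of the full equation, under the assumption that emissions caused by AI's uses are added and emissions prevented by AI's uses are subtracted, would be something like:

```latex
\[
\begin{aligned}
\text{Total emissions}
  ={}& (\text{\# of prompts}) \times (\text{average energy/prompt})
       \times (\text{average emissions/energy used}) \\
   &+ (\text{emissions from training models}) \\
   &+ (\text{embodied emissions of AI hardware}) \\
   &+ (\text{emissions caused by how AI's used}) \\
   &- (\text{emissions prevented by how AI's used})
\end{aligned}
\]
```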

I’ve made the point before that we can’t just look at the total emissions of a process, we need to make some decisions about how useful or useless (or bad) it is. A children’s hospital emits way more than a Hummer, but I’d rather get rid of the Hummer than the children’s hospital. AI’s overall value is a thorny question. I’ve made some points about it here and here, but it seems hard to score and add to this equation. We could try to score it by adding a “value score” from 0 to 1. 0 is “AI is completely useless” and 1 is “AI is so useful that no amount of emissions we cause for it will be a problem.” We could then multiply the total emissions by (1 - (value score)) to get a sense of how bad AI is overall. This seems kind of silly, so I won’t write out the full equation. My point is that a lot of this debate is going to hinge on AI’s actual value as a service, not just its net emissions.

This equation is also limited in that it ignores how prompting AI incentivizes more AI use, or really any predictions about the future of AI, which are all over the place.

When you write all this out, it becomes clear that expecting every post about AI and the environment to address every single variable is ridiculous. There’s a crazy amount to say about each one.

Here are some relevant articles on each individual variable:

# of prompts: “AI is the most rapidly adopted technology in history.”

Average energy/prompt: Google’s recent report, Epoch, and my posts here and here.

Emissions/energy used: MIT Technology Review’s coverage, where they find data centers use energy that’s 48% more carbon intensive than average.

Emissions from training models: I think everyone thinking about AI and the environment should make sure they understand the scaling laws AI labs are working under. Labs have a pretty strong incentive to make models larger and larger, because this consistently yields better and better performance. Here’s a report from Epoch on the gargantuan energy costs of training new models.

Embodied emissions of AI hardware: Here’s a recent report trying to estimate AI’s embodied emissions.

Emissions caused by how AI’s used: Jevons’ Paradox in general, and lots of specific examples like this: “A report published by the University of Michigan Center for Sustainable Systems highlighted that AV systems could increase vehicle primary energy use and GHG emissions by 3% to 20% due to more power consumption, weight, drag, and data transmission.”

Emissions prevented by how AI’s used: “Net zero needs AI — five actions to realize its promise” and “AI Could Be Harnessed to Cut More Emissions Than It Creates.”

Value we’re getting out of it: Here’s a very pro and very anti AI article. Here’s a very short and very long take from me. This debate alone is consuming so much of people’s reading time that it seems kind of hopeless to reach any significant agreement anytime soon.

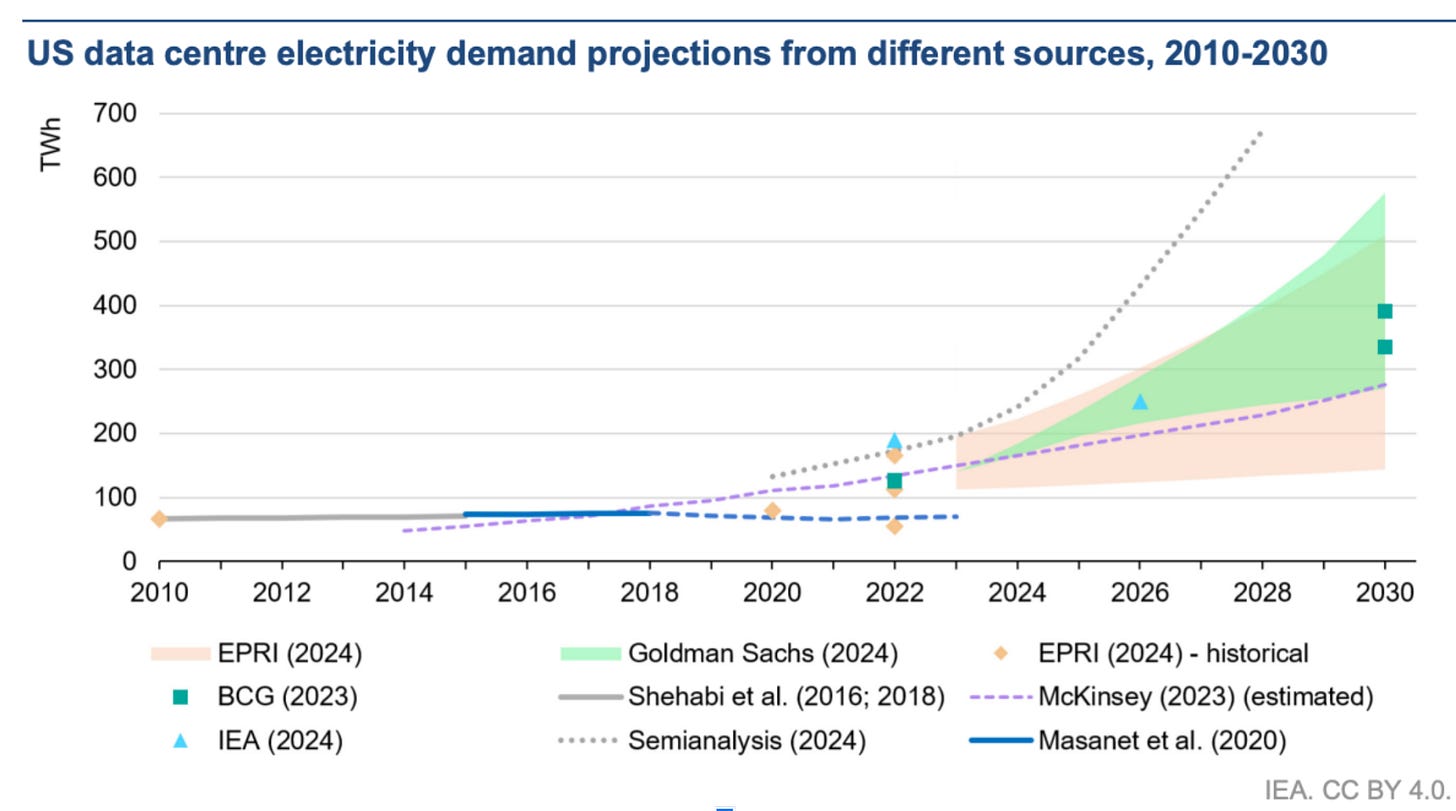

The future of AI’s energy use: Ridiculously hard to predict. The IEA has a decent report here.

Here are a ton of other questions about AI and the environment. I cannot possibly fit these all into a single equation:

Water

Local water prices

Local water ecology (which sometimes competes with local water prices. Phoenix residents who want maximally cheap water are not exactly friends of the local water ecology. Trade-offs need to be made between equity and local environments).

National and global access to freshwater

Water pollution

Ways AI can optimize water use

Water economics in general

Air pollution

How bad is air pollution from AI data centers?

How does it compare to other industries?

How can the ways AI is used prevent or cause air pollution?

Electricity costs

Rising electricity rates in different areas.

Household vs. commercial electricity rates.

How much should we focus on rationing the energy we have vs. building abundant green energy?

Mining materials

Mineral mining’s harm to local environments.

Geopolitics

Does AI increase or decrease the likelihood of great power war? Nuclear war would be bad for the environment…

Misc.

Ways local regions could use tax revenue from data centers for the environment

Will AI be an existential risk? The whole reason we work on environmental issues is for humanity and Earth to survive and do well. If there’s a decent likelihood AI itself could be an existential risk, this needs to be included in our calculations too.

Clarifying some of my beliefs on these questions

People have noticed that I’m not especially worried about AI’s overall environmental impacts, and some have inferred that this is creating a bias in my writing, or that I’m actively trying to present an incomplete picture to lure people into ignoring AI’s environmental harms. I want to clear up a few of my takes on these broader questions:

The idea that individual AI prompts are a significant part of the way you might harm the environment in your day to day life is straightforwardly wrong, and anyone telling you otherwise is either deeply confused or lying to you. This is not a place where reasonable disagreement is really possible.

I suspect that AI overall will on net be good rather than bad for the environment. This is a place I think reasonable people can radically disagree. I’m pretty unsure here. People much smarter and more knowledgeable than me have come to very different conclusions. A few reasons I suspect AI is probably on net going to be good for the environment:

AI is ultimately reliant on computers, and computing is basically the most resource-efficient thing we do as a society. Most physical activities humans do (flying, driving, shipping, farming, smelting steel) require orders of magnitude more energy per unit of output than running computations. The more AI uses our global energy budget, the more it’s likely to be optimizing lots of other processes that would have used significantly more energy without it. Consider Google Maps. It seems pretty likely to me that Maps has decreased people’s total time on the road by at least 1%. This saves way more emissions than Maps creates from the energy it uses in data centers. Computing gives us a lot of useful info for saving energy while spending very little energy to get that info.

The IEA expects all global data centers to reach peak emissions in the 2030s at around 320 million tonnes (Mt CO₂) per year. This is a lot, but to put this in context, it’s about 0.86% of global emissions right now. So if you believe that the entire internet, and the entirety of AI use in data centers together, could by 2030 be optimizing science and society to the point that our net emissions are just 1% lower than they would be if the entire internet and all large AI tools together didn’t exist, all data centers will have “paid for themselves” and will be net good for the climate. The climate only responds to total emissions. If AI prevents more than it adds, it’s by definition good for the climate. Google already estimates that Maps plus an AI model that optimizes traffic in cities to reduce emissions prevented ~26 million tons of CO2e in 2024 alone, so AI and the internet might already be “paying for” 1/12th of their maximum ever global emissions just through a few specific Google apps. We shouldn’t be too surprised by this, because cars emit a ton, and haven’t really been optimized in the ways they could be. Think of all the red lights you’ve waited at with no cars coming, while your car just idles and spews out emissions, because the traffic light can’t detect that there’s no reason not to switch to green. AI and the internet emit very little relative to how often we use them, and there are a ton of very simple ways to use them to optimize wasteful processes elsewhere.

Deep learning in general seems to be turning out to be a very powerful tool in a few key scientific areas, especially materials science. Despite the naysayers, deep learning just seems super useful for a lot of climate tech. Read more about that here.
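As a rough check on the arithmetic in the data center paragraph above, here is a minimal Python sketch. It uses only the figures quoted there (320 Mt, 0.86%, ~26 million tons). The function and variable names are made up for illustration, and it assumes "tons" and "tonnes" can be treated as the same unit at this level of precision.

```python
# Figures quoted in the text, all treated as Mt CO2 (or CO2e) per year.
DATA_CENTER_PEAK_MT = 320.0   # IEA projected peak for all global data centers
SHARE_OF_GLOBAL = 0.0086      # "about 0.86% of global emissions right now"
GOOGLE_PREVENTED_MT = 26.0    # Google's 2024 estimate (Maps plus traffic model)


def implied_global_emissions(peak_mt: float, share: float) -> float:
    """Global emissions implied if peak_mt is `share` of the global total."""
    return peak_mt / share


def fraction_offset(prevented_mt: float, peak_mt: float) -> float:
    """Fraction of the data center peak covered by prevented emissions."""
    return prevented_mt / peak_mt


global_mt = implied_global_emissions(DATA_CENTER_PEAK_MT, SHARE_OF_GLOBAL)
one_percent_mt = 0.01 * global_mt  # the "just 1% lower" break-even scenario
offset = fraction_offset(GOOGLE_PREVENTED_MT, DATA_CENTER_PEAK_MT)

print(f"Implied global emissions: {global_mt:,.0f} Mt/yr")
print(f"A 1% cut in global emissions: {one_percent_mt:,.0f} Mt/yr")
print(f"1% cut exceeds the data center peak: {one_percent_mt > DATA_CENTER_PEAK_MT}")
print(f"Google estimate as a fraction of the peak: about 1/{1 / offset:.0f}")
```

Under these assumptions, a 1% cut in global emissions comes out somewhat larger than the 320 Mt peak, and the Google estimate covers roughly a twelfth of that peak, which is consistent with the claims in the text.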

This is not a fringe position. The International Energy Agency says:

The adoption of existing AI applications in end-use sectors could lead to 1400 Mt of CO2 emissions reductions in 2035 in the Widespread Adoption Case. This does not include any breakthrough discoveries that may emerge thanks to AI in the next decade. These potential emissions reductions, if realized, would be three times larger than the total data centre emissions in the Lift-off Case, and four times larger than those in the Base Case.

So in a world where we adopt AI in more parts of the economy faster, it looks like on net it will prevent more emissions than it causes. If we achieve the high adoption situation the IEA describes, it will on net reduce global emissions by 1100 Mt of CO2 each year. That’s the current total yearly emissions of all global shipping and aviation. Seems good!

I don’t want people to get these 2 beliefs above mixed up. I am certain that your personal chatbot prompts don’t meaningfully harm the environment or increase your personal carbon footprint. I’m pretty uncertain about AI’s environmental impacts, but most of what I do know about it pushes me in the direction of thinking it won’t be a major problem, and will likely be net good for the climate. I’m not trying to mix these two beliefs. You can (and should) agree with me on the first, without agreeing with me at all on the second. There are so many other variables and open questions that the first doesn’t at all imply the second.

Conclusion

You should be wary of anyone saying that an article going into details on one specific environmental issue with AI is “painting an incomplete picture” of AI’s environmental impact, because painting that full picture would require crazy amounts of very detailed writing. We need to be able to do deep dives on each of these issues individually without being told off for not including every other possible environmental issue along with it.

[1] Great word.
